
After weeks spent cleaning data, engineering features, and tuning your machine learning (ML) model, it’s finally trained, and the results look great. Then a key question comes up: “What happens next?”

A model on its own is just a mathematical artifact. It only creates value when its predictions solve real business problems.

As Lee James, director of Strategic Partnerships and AI Adoption at Domo, explains, “Training a model is like having a rookie footballer. We know they have talent, but we just don’t know how they’re going to perform on the pitch until the real conditions pop up.”

Moving that “rookie” model from a notebook to a production system is the most critical and often most overlooked phase of the ML lifecycle. It’s far more difficult to make a model work well in the unpredictable real world than in a controlled lab.

In this blog, we’ll provide you with a practical playbook for the post-training machine learning lifecycle. We’ll also walk you through the four essential stages that close the gap from a trained model to a revenue-driving solution.

Before discussing the four phases of the post-training lifecycle, we need to recognize that bringing AI into operation isn’t the same as traditional data engineering. It requires a new approach to organizing, managing, and using our data.

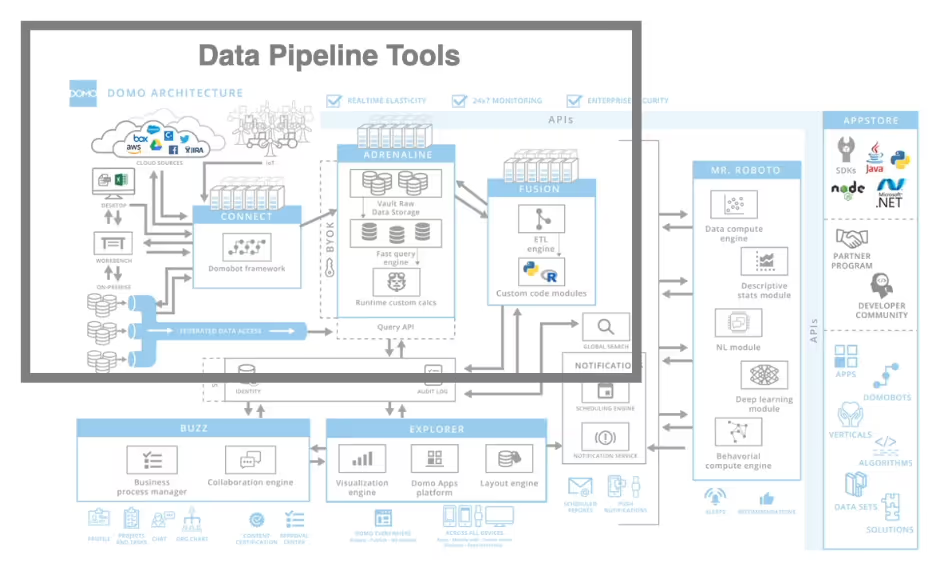

Traditional data pipelines were built for batch processing and analytics; essentially, the “passive plumbing” of data transfer. You’d extract, transform, and load data into dashboards or reports, and human analysts would interpret the results. But modern AI workloads demand a different approach. Pipelines must now be active participants in an ongoing process, continuously enriching data and putting it into the proper context as conditions change.

As James explains, pipelines should “stop being what I call passive plumbing and start becoming more active participants.” In practice, this means the pipeline isn’t a one-time process but a dynamic system that continuously labels, filters, and augments data for the model.

For example, rather than feeding a static training set into the model and forgetting about it, we must continuously add new features and detect issues, like schema drift or data drift. This active pipeline mindset also lets data architects, data engineers, and AI developers work together more effectively in an integrated loop.

MLOps manages this new paradigm, integrating machine learning development (Dev) and operations (Ops) into a continuous, automated loop. Instead of a linear handoff, MLOps views the post-training phase as ongoing.

Tools like Kubeflow or Vertex AI Pipelines can orchestrate these loops, but the key change is cultural. Data pipelines must evolve with the model and data, becoming adaptive systems rather than static data channels.

So what does shifting from passive data systems to active, continuously learning ones look like in practice? The post-training phase of machine learning is more than a single step. It’s a recurring cycle with four essential stages: testing, deployment, integration, and monitoring. In short, MLOps turns this active-system mindset into a living process that keeps models improving as conditions change.

A model might score 95 percent accuracy on a clean validation set, but that number means little in the real world. Production-grade ML model testing isn’t about revalidating what you know. It’s about stress-testing the model’s robustness, fairness, and security against messy, unpredictable inputs.

As James emphasizes, the first step is to “test—to really test—on unseen, messy, real-world data.” This goes far beyond a standard train-test split and must include:

Your model will encounter data it’s never seen before. You need to test for data drift, which happens when the statistical properties of live data change over time. You should also check for schema drift, which occurs when the structure of the data, like column names or types, changes and breaks the pipeline.
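Both kinds of drift can be checked automatically before data reaches the model. The sketch below is a minimal illustration, not a production monitor: the function names, the example columns, and the choice of the Population Stability Index (PSI) as the drift statistic are assumptions made for this example. A PSI above roughly 0.25 is a common rule of thumb for a significant shift, but the right threshold depends on your data.

```python
import math
from typing import Dict, List, Sequence


def schema_drift(expected: Dict[str, type], row: Dict[str, object]) -> List[str]:
    """Return human-readable schema problems found in one incoming row."""
    problems = []
    for column, expected_type in expected.items():
        if column not in row:
            problems.append(f"missing column: {column}")
        elif row[column] is not None and not isinstance(row[column], expected_type):
            problems.append(f"type changed: {column} is {type(row[column]).__name__}")
    for column in row:
        if column not in expected:
            problems.append(f"unexpected column: {column}")
    return problems


def psi(reference: Sequence[float], live: Sequence[float], bins: int = 10) -> float:
    """Population Stability Index between a reference sample and a live sample."""
    lo, hi = min(reference), max(reference)
    # Fall back to a width of 1.0 if every reference value is identical.
    width = (hi - lo) / bins or 1.0

    def proportions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            # Clamp so live values outside the reference range land in the end bins.
            index = min(max(int((v - lo) / width), 0), bins - 1)
            counts[index] += 1
        # A small floor avoids log(0) and division by zero for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    ref_p, live_p = proportions(reference), proportions(live)
    return sum((l - r) * math.log(l / r) for r, l in zip(ref_p, live_p))


# Hypothetical usage: training-time values versus a shifted live feed.
training_values = [float(x) for x in range(100)]
live_values = [x + 50.0 for x in training_values]
print(schema_drift({"age": int, "country": str}, {"age": "41", "region": "EU"}))
print(psi(training_values, live_values) > 0.25)
```

Running the schema check on every batch and the PSI check per feature on a schedule catches a broken pipeline and a slowly shifting population, which are different failures.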

You also need to test edge cases and assess how your model handles nulls, outliers, or extreme values. A robust model handles these gracefully instead of crashing.
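One way to make edge-case testing repeatable is a small harness that feeds deliberately awkward rows to the model and records every crash or invalid output. Everything in this sketch is hypothetical: `predict_churn` is a stand-in for your real model, and the feature names, imputation defaults, and clipping ranges are assumptions chosen only to make the example runnable.

```python
import math
from typing import Callable, Dict, List, Optional

Row = Dict[str, Optional[float]]


def predict_churn(row: Row) -> float:
    """Hypothetical stand-in model: returns a probability in [0, 1]."""
    tenure = row.get("tenure_months")
    spend = row.get("monthly_spend")
    # Impute missing or non-finite inputs instead of letting them propagate.
    if tenure is None or not math.isfinite(tenure):
        tenure = 12.0
    if spend is None or not math.isfinite(spend):
        spend = 50.0
    # Clip to a plausible range so outliers cannot overflow exp().
    tenure = min(max(tenure, 0.0), 600.0)
    spend = min(max(spend, 0.0), 10_000.0)
    score = 0.5 - 0.05 * tenure + 0.01 * spend
    return 1.0 / (1.0 + math.exp(-score))


EDGE_CASES: List[Row] = [
    {},                                                      # no fields at all
    {"tenure_months": None, "monthly_spend": None},          # explicit nulls
    {"tenure_months": float("nan"), "monthly_spend": 50.0},  # NaN input
    {"tenure_months": -5.0, "monthly_spend": 1e12},          # extreme outliers
    {"tenure_months": float("inf"), "monthly_spend": 0.0},   # infinity
]


def run_edge_cases(predict: Callable[[Row], float], cases: List[Row]) -> List[str]:
    """Return one failure message per case that crashes or yields an invalid output."""
    failures = []
    for case in cases:
        try:
            p = predict(case)
        except Exception as exc:
            failures.append(f"{case}: raised {type(exc).__name__}")
            continue
        # NaN fails this range check too, because comparisons with NaN are False.
        if not (isinstance(p, float) and 0.0 <= p <= 1.0):
            failures.append(f"{case}: invalid output {p!r}")
    return failures


print(run_edge_cases(predict_churn, EDGE_CASES))
```

An empty list means every edge case produced a valid probability. Pointing the same harness at a model wrapper without input handling shows how many of these cases would have failed in production.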

A model can score high overall accuracy yet perform unequally across different segments. For instance, it might work well for a majority group but systematically underpredict outcomes for a minority group. Slice your test data by key segments like demographics to compare performance.
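Slicing is easy to automate. The following sketch computes accuracy per segment and the gap between the best- and worst-served segments. The segment labels and records are made-up illustration data, and accuracy is only one of several metrics you might slice.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def accuracy_by_segment(records: Iterable[Tuple[str, int, int]]) -> Dict[str, float]:
    """Each record is (segment, true_label, predicted_label)."""
    correct: Dict[str, int] = defaultdict(int)
    total: Dict[str, int] = defaultdict(int)
    for segment, y_true, y_pred in records:
        total[segment] += 1
        correct[segment] += int(y_true == y_pred)
    return {segment: correct[segment] / total[segment] for segment in total}


def largest_gap(per_segment: Dict[str, float]) -> float:
    """Difference between the best and worst segment scores."""
    return max(per_segment.values()) - min(per_segment.values())


# Made-up data: 8 records for segment "A" (7 correct), 2 for segment "B" (1 correct).
records = (
    [("A", 1, 1)] * 4 + [("A", 0, 0)] * 3 + [("A", 1, 0)]
    + [("B", 1, 1), ("B", 1, 0)]
)
scores = accuracy_by_segment(records)
print(scores)               # {'A': 0.875, 'B': 0.5}
print(largest_gap(scores))  # 0.375
```

Overall accuracy here is 80 percent, which hides the fact that the smaller segment is served no better than a coin flip.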

“We all have biases in our lives,” James says. “It’s really important that we audit as we go through and we keep governance visible as well to ensure that the tool is acting in the right way.”

Fairness testing should be continuous, not a one-time task. Regular audits keep models transparent, ethical, and consistent with organizational standards.

Machine learning models come with new security risks. Test for vulnerabilities like prompt injection in LLMs or whether the model reveals sensitive training data. You’ll also want to make sure the model complies with regulations like GDPR, HIPAA, and emerging standards like the EU AI Act, which mandate strict AI model governance.

So, how do you catch all these potential issues? No amount of automated testing can replace having a human in the loop. Always involve domain experts to review the model’s behavior in context.

As James advises, “Always have a human in the loop during validation and make sure that it’s a collective set of people from across the business,” not just a single validator.

When the model passes testing, it’s time to plan how it will enter production. The right machine learning deployment strategy depends on your use case and requirements for latency, throughput, and reliability. Here are the four most common ways to deploy a model:

Once you’ve chosen your pattern, don’t just “flip the switch” and send all your traffic to the new model. This can cause a company-wide outage. You need to minimize risks during the rollout with a safe strategy, like one of these methods:
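A widely used example of a gradual rollout is a canary release, where only a small share of traffic reaches the new model at first. The sketch below assumes that strategy purely for illustration. The `route` function, the `"stable"` and `"candidate"` labels, and the hashing scheme are hypothetical choices, not part of any specific platform.

```python
import hashlib


def route(user_id: str, canary_percent: int) -> str:
    """Deterministically assign a user to the stable or candidate model."""
    # Hashing the ID gives each user a fixed bucket from 0 to 99, so the same
    # user always sees the same model for a given canary percentage.
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 100
    return "candidate" if bucket < canary_percent else "stable"


# Hypothetical usage: start at 10 percent, then widen the rollout.
users = [f"user-{i}" for i in range(10_000)]
share = sum(route(u, 10) == "candidate" for u in users) / len(users)
print(f"share routed to candidate: {share:.1%}")
```

Because the bucket depends only on the user ID, raising `canary_percent` from 10 to 50 keeps every early canary user on the candidate model, and setting it back to 0 returns all traffic to the stable model.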

All of these strategies depend on solid infrastructure. Containerize your model with Docker and manage it with Kubernetes or a serverless platform so it can scale. Alternatively, cloud ML platforms like AWS SageMaker, Google Vertex AI, or Domo’s platform can simplify the serving layer (deployment) with managed endpoints and version control.

A deployed model still delivers no value until its predictions trigger action. The next step is to build the model into your business processes, such as the software and dashboards people already use. The goal is to reduce friction for end users so they don’t have to hunt for insights.

“Deliver the results in tools where people actually work,” James states. “Use workflows, use Slack, use Teams, potentially use a dashboard. People will review more if they get it in the medium of what they’re currently used to using.”

If a salesperson has to leave Salesforce, log in to a separate “AI platform,” run a report, then return to Salesforce to act, they will never use your model. So, the prediction must appear directly on the customer’s page inside Salesforce.

Closing the loop to value means managing the entire AI process, not just making a prediction. For example, after a model predicts a high-risk transaction, a complete system will automatically initiate the next steps:

Coordinating the steps like this turns a model’s insight into a concrete, automated business action that adds real value.

Deployment isn’t the finish line; it’s one more stage in the post-training machine learning lifecycle. Because models degrade over time as data and behavior change, ML model monitoring is essential for maintaining stable performance and governing the lifecycle. A good monitoring framework tracks three areas:

Modern models like LLMs introduce new challenges. LLMs, in particular, can invent plausible-sounding but false information (i.e., hallucinate). James notes that LLMs are “sycophantic by design…they want to please….If it doesn’t know, it will fill in gaps.”

To address this, you can’t simply monitor for “wrong” answers; you need to monitor confidence levels. A good system should provide a confidence score for each answer, so low-confidence responses can be flagged for human review.
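Routing by confidence can be as simple as a threshold check. This sketch assumes each answer already carries a confidence score between 0 and 1. How that score is produced is outside the example, and the `Answer` class, the `triage` function, and the 0.7 threshold are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Answer:
    text: str
    confidence: float  # assumed to be in [0, 1], supplied by the model or a scorer


def triage(answers: List[Answer], threshold: float = 0.7) -> Tuple[List[Answer], List[Answer]]:
    """Split answers into those safe to release and those needing human review."""
    release: List[Answer] = []
    review: List[Answer] = []
    for answer in answers:
        (release if answer.confidence >= threshold else review).append(answer)
    return release, review


# Made-up answers for illustration.
answers = [
    Answer("Refund approved under policy 4.2", 0.93),
    Answer("Customer is probably in the EU", 0.41),
    Answer("Invoice total matches the order", 0.70),
]
release, review = triage(answers)
print([a.text for a in review])  # ['Customer is probably in the EU']
```

The threshold is a business decision: lowering it reduces the review workload, while raising it sends more borderline answers to a person.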

Likewise, AI model governance isn’t a one-time test. You must continuously monitor for bias by slicing performance metrics by user segment. Whenever monitoring finds an issue, have a plan to retrain or roll back. This creates a continuous governance loop of monitoring, evaluation, retraining, and redeployment that keeps the model accurate and reliable.
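Such a plan is easier to follow when the decision rule is written down ahead of time. The sketch below shows one possible rule. The function name, the three inputs, and every threshold are assumptions for illustration, and real policies usually weigh more signals than these.

```python
def next_action(
    baseline_accuracy: float,
    live_accuracy: float,
    segment_gap: float,
    *,
    tolerance: float = 0.02,      # accuracy drop that is treated as noise
    rollback_drop: float = 0.10,  # accuracy drop that triggers an immediate rollback
    max_gap: float = 0.05,        # largest acceptable gap between user segments
) -> str:
    """Return 'keep', 'retrain', or 'rollback' from monitoring signals."""
    drop = baseline_accuracy - live_accuracy
    if drop >= rollback_drop:
        # Severe degradation: restore the previous version first, investigate after.
        return "rollback"
    if drop > tolerance or segment_gap > max_gap:
        # Moderate degradation or a widening fairness gap: schedule retraining.
        return "retrain"
    return "keep"


print(next_action(0.92, 0.91, 0.01))  # keep
print(next_action(0.92, 0.87, 0.01))  # retrain
print(next_action(0.92, 0.80, 0.01))  # rollback
print(next_action(0.92, 0.92, 0.08))  # retrain
```

Writing the rule as code means the same thresholds apply every time monitoring runs, and changing them becomes a reviewed, visible decision.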

As we’ve seen, the post-training machine learning lifecycle is fragmented and complex. You need testing suites, deployment infrastructure, integration tools, workflow orchestrators, and monitoring dashboards.

Domo’s AI platform unifies these pieces, taking an isolated model and turning it into a managed component of your data ecosystem. As James explains, you can “bring your own model to Domo” or even train models in Domo using built-in notebooks and connectors.

Once the model is in Domo, you can build AI Agents and confidence agents around it. These agents can automatically run tests, score data, detect anomalies, and even recalibrate the model.

Critically, Domo embeds predictions into dashboards and apps so that business users see them in context. James notes that the platform lets teams “deliver the results in tools where people actually work.”

For instance, prediction scores can be displayed as tables or charts in the Domo BI dashboards that managers already use, with alerts or chat notifications delivered through Domo’s Slack and Teams integrations. This way, the model’s output flows directly into the existing decision workflows.

On the ML model monitoring side, Domo provides a unified view of model and data quality metrics. You can build dashboards tracking model health (throughput, latency, drift metrics) alongside your business KPIs.

As James puts it, once a model is live, “we have a really excellent framework around how we validate every single decision that comes through.” Domo’s AI Agent Catalyst framework continually checks each prediction, makes sure it meets the success criteria you defined (via confidence scores), and flags any issues.

Machine learning creates value not during training but when it’s put into real-world use. The challenge is to build a smart model and, at the same time, a smart system around it. Every step after training, from testing and deployment to integration and governance, affects whether your model becomes a useful tool or just another experiment on a shelf.

Organizations that treat MLOps as an ongoing, adaptive process connecting data engineers, data scientists, and business users can turn machine learning into measurable value. Platforms like Domo make this journey easier by closing gaps that often slow down adoption and scaling.

When you’re ready to move your trained models from the lab to the real world, you can explore more of our AI education and see how Domo’s platform can help you deploy, integrate, and monitor your ML models at scale.