Connect all your data and build pipelines faster.

Achieve flexible connectivity, effortless orchestration, and enterprise-grade governance, no matter your level of technical expertise.

Trusted by data-driven companies

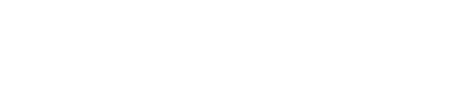

Architecture that scales

Hydrate your entire data ecosystem with Domo's flexible, reliable architecture. Easily ingest, prepare, and deliver governed data at scale, without ever sacrificing performance.

Connect to everything that matters

Connect to data wherever it lives: on-prem, in the cloud, or at the edge. With 1,000+ pre-built connectors, technical and business users alike can bring data together, while built-in governance safeguards let IT retain full control and keep security intact.

Rapid time-to-value with native connections to Salesforce, SAP, Google Analytics, and hundreds more.

Quickly ingest flat files, documents, and more with easy drag-and-drop tools, no technical expertise needed.

Create and publish custom connectors for any API not available in the AppStore. The Connector IDE runs on Domo’s orchestration, so you don’t have to manage hosting or orchestration code. Just build and connect.

Efficiently ingest data or write it back to your warehouse and source systems, supporting batch, micro-batch, and streaming use cases.

Use CLI tools and advanced automation to schedule, trigger, and monitor your data pipelines with less manual intervention.

Keep your data fresh on your schedule. Choose any ingestion cadence, from simple uploads to near real-time, and pick the update method that suits your needs: upsert, partitioning, full replace, or append.
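The four update methods differ mainly in what happens to rows that were already loaded. The Python sketch below illustrates their general semantics on an in-memory table. It is not Domo's API: the function names, the `id` key column, and the `date` partition column are hypothetical choices made for illustration.

```python
from typing import Dict, List

# Hypothetical row type: column name -> value. Not a Domo data structure.
Row = Dict[str, object]


def append(existing: List[Row], incoming: List[Row]) -> List[Row]:
    """Append: keep every existing row and add all incoming rows after them."""
    return existing + incoming


def full_replace(existing: List[Row], incoming: List[Row]) -> List[Row]:
    """Full replace: discard what was loaded before and keep only the new batch."""
    return list(incoming)


def upsert(existing: List[Row], incoming: List[Row], key: str = "id") -> List[Row]:
    """Upsert: update rows whose key already exists and insert the rest."""
    merged = {row[key]: row for row in existing}
    for row in incoming:
        merged[row[key]] = row  # overwrite on key match, insert otherwise
    return list(merged.values())


def replace_partitions(existing: List[Row], incoming: List[Row], partition: str = "date") -> List[Row]:
    """Partitioning: swap out only the partitions present in the new batch."""
    touched = {row[partition] for row in incoming}
    kept = [row for row in existing if row[partition] not in touched]
    return kept + incoming


existing = [
    {"id": 1, "date": "2024-01-01", "amount": 10},
    {"id": 2, "date": "2024-01-02", "amount": 20},
    {"id": 4, "date": "2024-01-02", "amount": 40},
]
incoming = [
    {"id": 2, "date": "2024-01-02", "amount": 25},
    {"id": 3, "date": "2024-01-03", "amount": 30},
]

for method in (append, full_replace, upsert, replace_partitions):
    print(method.__name__, [row["id"] for row in method(existing, incoming)])
# append [1, 2, 4, 2, 3]
# full_replace [2, 3]
# upsert [1, 2, 4, 3]
# replace_partitions [1, 2, 3]
```

Note that upsert keeps row 4, while partition replacement drops it because its whole `2024-01-02` partition was superseded by the new batch.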

Integrate data from 1,000+ connectors

Take full control of your pipelines

Move fast without sacrificing reliability. Domo adapts to your operational requirements, letting you schedule pipelines on specific intervals, during defined windows, or via event-driven triggers.

Improve pipeline performance by ingesting only what has changed, or re-sync your entire data history on your own schedule.
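One common way to ingest only what has changed is a high-watermark query against an `updated_at` column. The sketch below shows that general technique with Python's built-in `sqlite3` standing in for a source system. It is not how Domo implements incremental updates; the `orders` table and the `fetch_changes` helper are hypothetical.

```python
import sqlite3
from typing import List, Optional, Tuple


def fetch_changes(
    conn: sqlite3.Connection, watermark: Optional[str]
) -> Tuple[List[tuple], Optional[str]]:
    """Return rows changed after `watermark`, plus the new watermark.

    Passing watermark=None re-syncs the full history.
    """
    if watermark is None:
        rows = conn.execute(
            "SELECT id, amount, updated_at FROM orders ORDER BY updated_at"
        ).fetchall()
    else:
        rows = conn.execute(
            "SELECT id, amount, updated_at FROM orders "
            "WHERE updated_at > ? ORDER BY updated_at",
            (watermark,),
        ).fetchall()
    # The newest timestamp seen becomes the starting point for the next run.
    new_watermark = rows[-1][2] if rows else watermark
    return rows, new_watermark


# Hypothetical source table; ISO-8601 strings sort chronologically as text.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, amount REAL, updated_at TEXT)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [(1, 10.0, "2024-01-01T00:00:00"), (2, 20.0, "2024-01-02T00:00:00")],
)

full_rows, watermark = fetch_changes(conn, None)  # initial full sync: 2 rows

# Between runs, one row changes and one is added at the source.
conn.execute("UPDATE orders SET amount = 25.0, updated_at = '2024-01-03T00:00:00' WHERE id = 2")
conn.execute("INSERT INTO orders VALUES (3, 30.0, '2024-01-03T12:00:00')")

changed_rows, watermark = fetch_changes(conn, watermark)
print([row[0] for row in changed_rows])  # [2, 3]
```

A strict `>` comparison assumes timestamps only move forward. Rows committed late with an older `updated_at` would be missed, which is one reason a periodic full re-sync remains useful.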

Support both push and pull models for maximum flexibility in synchronizing data across environments.

Run pipelines on precise intervals, during set windows, or on demand to meet business needs.

Use event-based updates to trigger data flows automatically when specific system activities or data changes occur.

Safely test changes in a versioned environment before promoting them to production, ensuring stability.

“It used to take days to go to each platform, pull all the data, combine and analyze it in a spreadsheet, and then get a report to a client. With Domo, we have preset dashboards that are automatically updated. All we need to do in the morning now is look at the data, add our insight, and send it to the client. What used to take 2.5 days now takes one hour.”

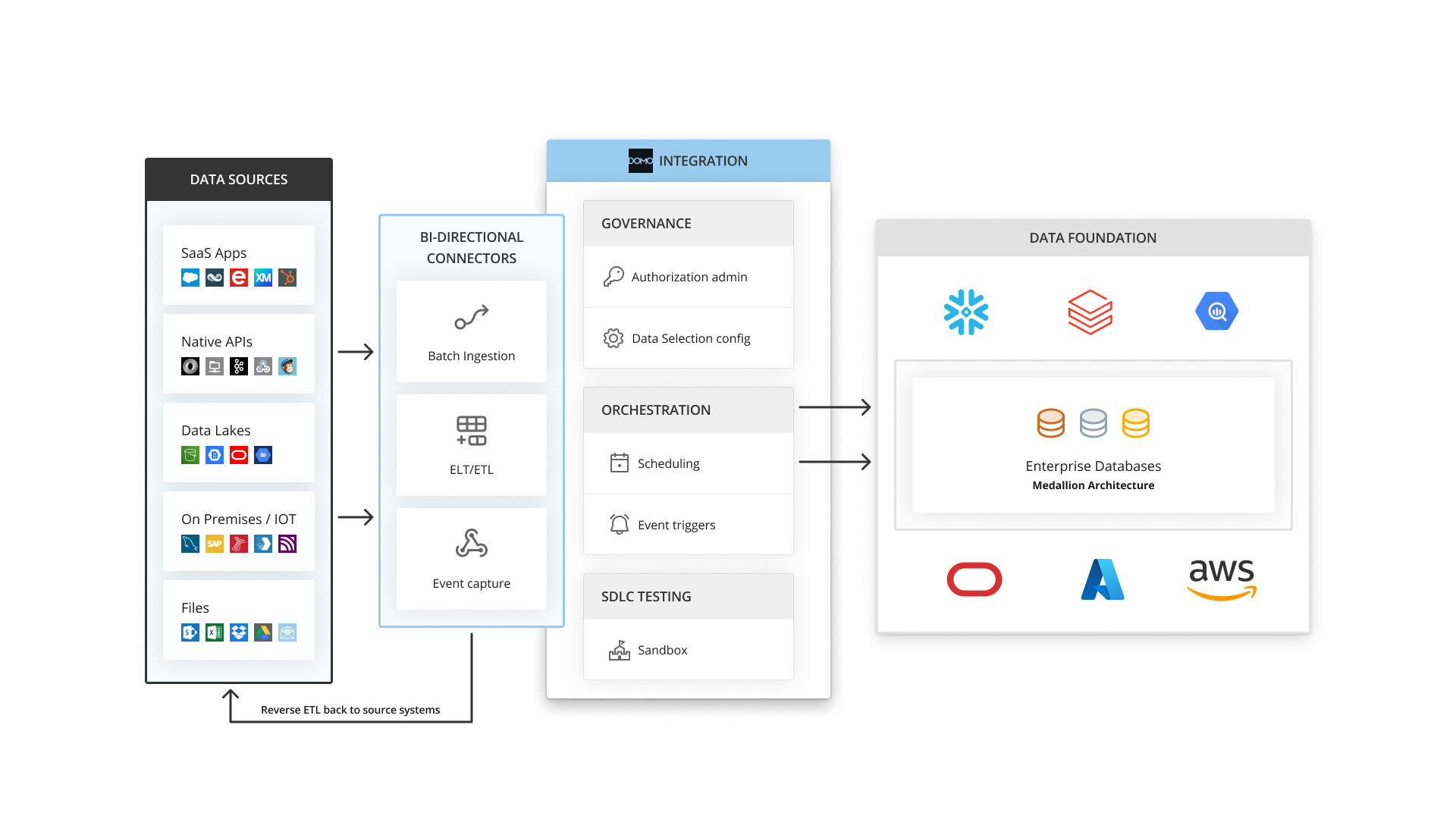

Built-in security and governance

Secure your most critical data. Governance isn’t bolted on; it’s built in. Domo empowers IT leaders to centralize management while giving business teams the access they need.

Protect data with encryption in transit and granular row- and column-level permissions that can be inherited from your warehouse.

Automatically monitor for PII to surface potential risks before they become compliance issues.

Track lineage from source to destination and gain deep visibility into pipeline performance, usage, and impact with system-generated DomoStats.

Instantly notify teams of anomalies, failures, or unexpected changes so you can act before issues impact your business.

Control changes with built-in sandbox environments, allowing safe testing before updates go live.

Maintain a clear, tamper-proof record of data access, changes, and usage for compliance and peace of mind.

Trusted by industry experts

FAQ

We have answers

How does Domo handle secure access to on-premises data without complex firewall changes?

Security is a top priority. For on-premises data, Domo uses a lightweight, secure agent called Workbench that sits safely behind your firewall. It establishes an encrypted, outbound-only connection to the Domo cloud over standard HTTPS. This design means you typically don't need to open inbound ports or manage complicated VPN tunnels, keeping your internal network secure while ensuring data flows reliably.

Who is responsible for maintaining pre-built connectors when source APIs change?

We handle the maintenance for you. A key benefit of our 1,000+ pre-built connectors is that our engineering team actively manages and updates them. When a third-party SaaS provider updates their API or changes authentication methods, we update the connector on our end. This ensures your data pipelines continue to run smoothly without requiring your team to rewrite code or troubleshoot broken integrations.

I have a niche, custom-built system. What are my options if a pre-built connector doesn't exist?

You have several flexible options. You can use our generic connectors (like ODBC, JDBC, SFTP, or various web-based APIs) to connect to almost any system. For simpler needs, file uploads or email-based ingestion are also available. For a fully integrated solution, your technical team can use the Connector IDE to build and publish a custom connector that leverages Domo’s native orchestration engine, just like our pre-built ones.

Can I filter or transform data before it's ingested to reduce load and storage?

Yes, where the source supports it. You can filter rows or columns as data is extracted, either when the source API offers that capability or when you ingest through Workbench. Bringing in only the most relevant data minimizes unnecessary load and storage. Because source-side filtering relies on the options each API or system exposes, the available filtering features vary by integration.
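Filtering at the source amounts to pushing a column list and a row predicate into the extraction query instead of pulling everything and discarding rows afterward. This Python sketch shows the idea against a `sqlite3` database standing in for a source system; the `events` table and the `extract_filtered` helper are hypothetical and are not part of Workbench or any Domo API.

```python
import re
import sqlite3
from typing import Dict, List, Sequence

_IDENTIFIER = re.compile(r"^[A-Za-z_][A-Za-z0-9_]*$")


def _checked(name: str) -> str:
    # Table and column names cannot be bound as SQL parameters, so validate them.
    if not _IDENTIFIER.match(name):
        raise ValueError(f"invalid identifier: {name!r}")
    return name


def extract_filtered(
    conn: sqlite3.Connection,
    table: str,
    columns: Sequence[str],
    equals: Dict[str, object],
) -> List[tuple]:
    """Select only `columns` from rows matching every `column = value` pair."""
    sql = f"SELECT {', '.join(_checked(c) for c in columns)} FROM {_checked(table)}"
    if equals:
        sql += " WHERE " + " AND ".join(f"{_checked(c)} = ?" for c in equals)
    # Values are bound as parameters; the source database does the filtering.
    return conn.execute(sql, tuple(equals.values())).fetchall()


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE events (id INTEGER, region TEXT, event_type TEXT, payload TEXT)")
conn.executemany(
    "INSERT INTO events VALUES (?, ?, ?, ?)",
    [
        (1, "EU", "click", "blob"),
        (2, "US", "click", "blob"),
        (3, "EU", "purchase", "blob"),
        (4, "APAC", "view", "blob"),
    ],
)

# Only two columns of the EU rows leave the source; `payload` is never transferred.
eu_rows = extract_filtered(conn, "events", ["id", "event_type"], {"region": "EU"})
print(sorted(eu_rows))  # [(1, 'click'), (3, 'purchase')]
```

Whether a real connector can push filters down like this depends on what the source API or database exposes, which is why the options differ from one integration to the next.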

Will frequent data updates slow down our business-critical operational systems?

Domo is designed to minimize impact on your source systems. You have granular control over ingestion schedules, allowing you to run large data loads during off-peak hours. More importantly, our platform supports "smart updates" and "upsert" methods. This means we only ingest the data that has changed since the last run, rather than performing a full reload every time, significantly reducing the load on your operational systems.

How frequently can I schedule my data to be updated?

The refresh frequency is highly flexible and depends on your business needs and the source system's capabilities. Our standard connectors support schedules ranging from daily or hourly down to every 15 minutes. For scenarios that require data to be updated more frequently, APIs and additional integrations can enable near real-time ingestion. This ensures your decision-makers have access to the most up-to-date information possible within these limits.
