Saved hundreds of hours of manual processes when forecasting a game's audience, using Domo's automated DataFlow engine.

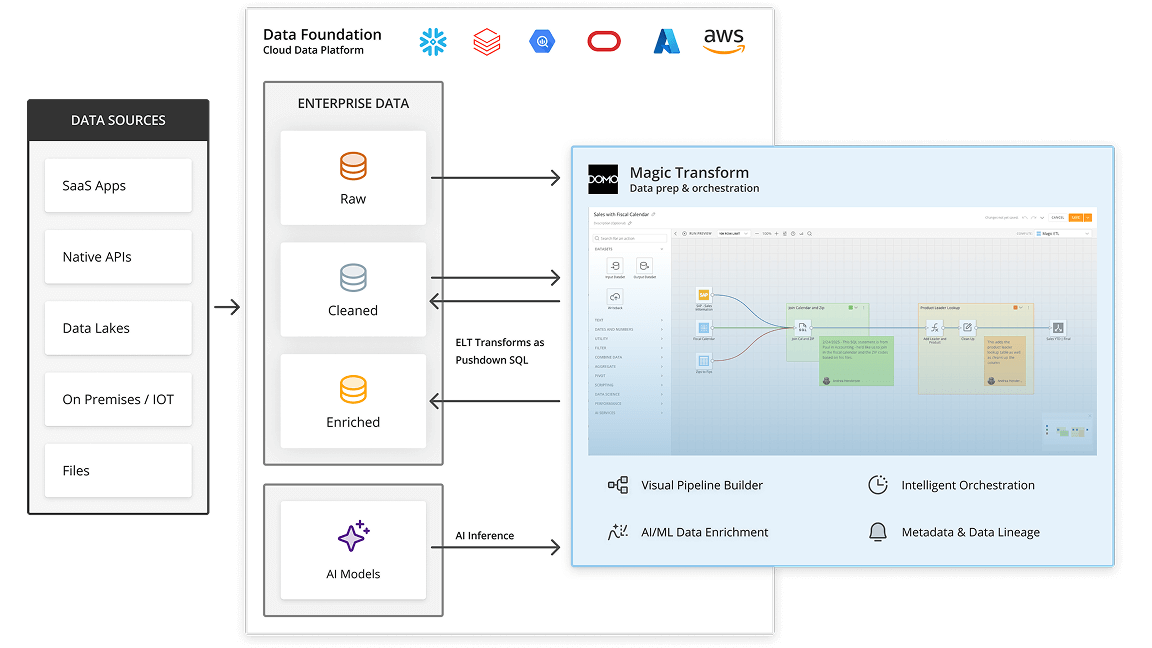

Orchestrate end-to-end automated pipelines using accessible transformation tools, while inherited security and governance work at every step to ensure your data is ready for AI.

Trusted by data-driven companies

Refine your entire data ecosystem with Domo's flexible, reliable architecture. Easily clean, transform, and orchestrate governed data pipelines at scale, without ever sacrificing performance.

A more cost-effective approach to data transformation. Tackle complex, high-value work with the advanced SQL, Python, and AI tools your specialists need, while empowering business users with governed, drag-and-drop workflows for their own data prep. This dual approach reduces IT backlogs and enables faster, more efficient self-service across the organization.

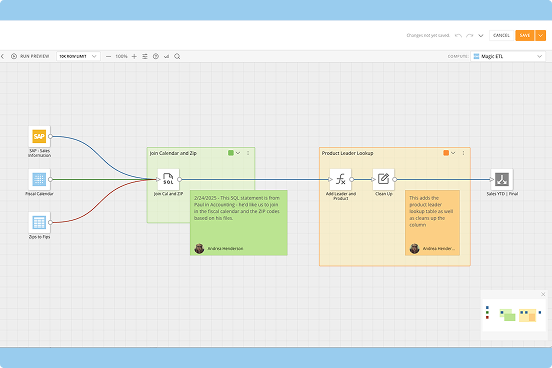

Visually map out and connect every step of your data's journey. Chain multiple transformations and actions together in an intuitive drag-and-drop interface to build complex pipelines without code. Use built-in sections and notes to document your logic, then extend the same experience to business users, all within one governed environment that you oversee.

Write powerful SQL transformations and build complex data pipelines with full control over both the logic and the output method—from full replaces and appends to advanced partitioning and upsert configurations.
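The four output methods differ only in what happens to rows that are already stored. The sketch below illustrates those semantics on in-memory rows. It is a hypothetical helper (`write_output`, the `id` key, and the `date` partition column are all assumptions for illustration), not Domo's API or its internal implementation.

```python
from typing import Dict, List

Row = Dict[str, object]


def write_output(existing: List[Row], new: List[Row], mode: str,
                 key: str = "id", partition_col: str = "date") -> List[Row]:
    """Return the stored dataset after writing `new` with the given mode.

    Hypothetical helper for illustration only; not a Domo API.
    """
    if mode == "replace":
        # Full replace: discard everything that was stored before.
        return list(new)
    if mode == "append":
        # Append: keep old rows and add the new ones, even if keys repeat.
        return existing + new
    if mode == "upsert":
        # Upsert: overwrite rows whose key already exists, insert the rest.
        merged = {row[key]: row for row in existing}
        for row in new:
            merged[row[key]] = row
        return list(merged.values())
    if mode == "partition":
        # Partition replace: swap out only the partitions present in `new`.
        touched = {row[partition_col] for row in new}
        kept = [row for row in existing if row[partition_col] not in touched]
        return kept + new
    raise ValueError(f"unknown mode: {mode}")


existing = [{"id": 1, "date": "2024-01-01", "v": 10},
            {"id": 2, "date": "2024-01-02", "v": 20}]
new = [{"id": 2, "date": "2024-01-02", "v": 25},
       {"id": 3, "date": "2024-01-03", "v": 30}]

for mode in ("replace", "append", "upsert", "partition"):
    print(mode, [(r["id"], r["v"]) for r in write_output(existing, new, mode)])
```

Append keeps both versions of row 2, while upsert and partition replace keep only the newer one. Partition replace does so because the whole `2024-01-02` partition is swapped out rather than matched by key.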

Enrich your data pipelines by integrating a full suite of AI services directly into your workflows. Use Domo’s native models, bring your own custom model (BYOM), or plug into external platforms like Hugging Face. This open approach allows you to achieve true data science automation using the exact models you need.

Empower your data scientists with fully integrated Jupyter Workspaces. Enable them to use their preferred interactive notebook environment to explore data, build sophisticated models, and visualize results using Python or R, all without leaving the Domo platform.

Keep your data fresh without breaking the bank. Domo optimizes processing based on data inputs, and can automatically adjust to use different methods—like upsert, partitioning, full replace, or append—to optimize performance by only updating the relevant batches or micro-batches.
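One widely used way to process "only the relevant batches" is a high-water mark. Each run picks up rows changed since the last recorded timestamp, then advances that timestamp. The source does not say Domo uses this exact technique. The sketch below is a generic illustration, and the `incremental_batch` helper and `updated_at` column are hypothetical.

```python
from datetime import datetime
from typing import Dict, List, Tuple

Row = Dict[str, object]


def incremental_batch(rows: List[Row], watermark: datetime,
                      ts_col: str = "updated_at") -> Tuple[List[Row], datetime]:
    """Pick only rows changed after `watermark`; return them and the next watermark.

    Hypothetical high-water-mark helper; not a Domo API.
    """
    batch = [r for r in rows if r[ts_col] > watermark]
    # Advance the watermark to the newest timestamp seen; keep it if nothing changed.
    next_mark = max((r[ts_col] for r in batch), default=watermark)
    return batch, next_mark


source = [
    {"id": 1, "updated_at": datetime(2024, 1, 1)},
    {"id": 2, "updated_at": datetime(2024, 1, 2)},
    {"id": 3, "updated_at": datetime(2024, 1, 3)},
]

# First run after Jan 1: only rows 2 and 3 are processed.
batch, mark = incremental_batch(source, datetime(2024, 1, 1))
# Second run with nothing new: an empty batch and an unchanged watermark.
batch2, mark2 = incremental_batch(source, mark)
```

Because an unchanged source yields an empty batch, the cost of a refresh scales with the volume of changed data rather than the size of the whole dataset.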

Move beyond single transformations and automate entire data pipelines at any scale. Visually build, orchestrate, document and monitor complex, multi-step pipelines that can be saved and reused across the business. This eliminates time-consuming manual processes and ensures your team can reliably deliver enriched data as your business needs grow.

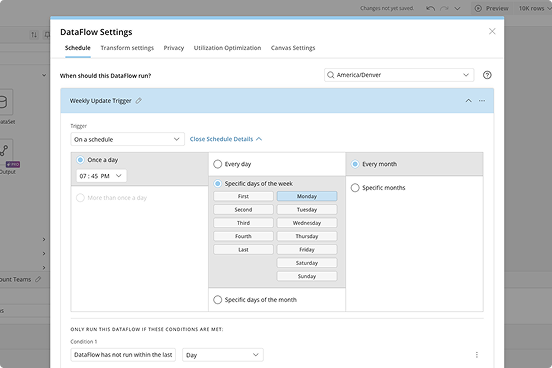

Automate your data pipelines to run on a custom schedule, whenever an upstream dataset is updated, or on-demand via an API. This ensures your data is always fresh and delivered when you need it.
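The three trigger types can be thought of as three independent reasons to start a run. The sketch below shows that decision logic under assumed names (`TriggerConfig`, `run_reason`, and the `orders_raw` dataset are hypothetical). It is not how Domo's scheduler is implemented.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Dict, Optional, Tuple


@dataclass
class TriggerConfig:
    interval: Optional[timedelta] = None   # fixed schedule, if any
    upstream: Tuple[str, ...] = ()         # datasets whose updates start a run


def run_reason(cfg: TriggerConfig, last_run: datetime, now: datetime,
               upstream_updated: Dict[str, datetime],
               api_requested: bool = False) -> Optional[str]:
    """Return why the pipeline should run now, or None if it should wait.

    Hypothetical scheduler logic for illustration only.
    """
    if api_requested:
        return "api"  # an on-demand request always starts a run
    if cfg.interval is not None and now - last_run >= cfg.interval:
        return "schedule"
    for name in cfg.upstream:
        updated = upstream_updated.get(name)
        if updated is not None and updated > last_run:
            return "upstream"
    return None


cfg = TriggerConfig(interval=timedelta(hours=6), upstream=("orders_raw",))
last = datetime(2024, 1, 1, 0, 0)
print(run_reason(cfg, last, datetime(2024, 1, 1, 2), {"orders_raw": datetime(2024, 1, 1, 1)}))
print(run_reason(cfg, last, datetime(2024, 1, 1, 7), {}))
```

The first call reports `upstream` because `orders_raw` changed after the last run, even though the six-hour interval has not elapsed. The second reports `schedule`.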

Build complex transformation logic once and save it as a reusable DataFlow. Apply this standardized logic across multiple data pipelines to ensure consistency and dramatically accelerate development.

Gain full visibility into your data ecosystem with an interactive map of how data flows. Instantly understand dependencies between datasets and troubleshoot issues by seeing where data comes from and where it goes.
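A lineage map is a directed graph from inputs to the outputs that read them. "Where does it go" is a forward traversal, and "where does it come from" is the same traversal over reversed edges. The sketch below uses hypothetical dataset names and a plain breadth-first search. It is a generic illustration, not Domo's lineage service.

```python
from collections import deque
from typing import Dict, List, Set

# Hypothetical lineage: each key feeds the datasets listed as its value.
EDGES: Dict[str, List[str]] = {
    "salesforce_raw": ["opportunities_clean"],
    "workday_raw": ["headcount_clean"],
    "opportunities_clean": ["revenue_by_region"],
    "headcount_clean": ["revenue_by_region"],
    "revenue_by_region": ["exec_dashboard"],
}


def downstream(graph: Dict[str, List[str]], start: str) -> Set[str]:
    """Everything that (directly or indirectly) depends on `start`."""
    seen: Set[str] = set()
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for child in graph.get(node, []):
            if child not in seen:
                seen.add(child)
                queue.append(child)
    return seen


def upstream(graph: Dict[str, List[str]], target: str) -> Set[str]:
    """Everything `target` is built from: reverse the edges, then traverse."""
    reverse: Dict[str, List[str]] = {}
    for src, dsts in graph.items():
        for dst in dsts:
            reverse.setdefault(dst, []).append(src)
    return downstream(reverse, target)


print(sorted(downstream(EDGES, "workday_raw")))
print(sorted(upstream(EDGES, "revenue_by_region")))
```

When troubleshooting, `downstream` answers "what breaks if this source fails," and `upstream` answers "which sources could explain a bad number here."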

Proactively monitor the health of your data pipelines with custom alerts. Get notified of successes, failures, or data anomalies via email, mobile, or Slack so you can take immediate action.

Close the loop on your automation by sending transformed, enriched data back into other cloud systems like Salesforce, Workday, or Google Ads to trigger actions, update records, and operationalize your insights.

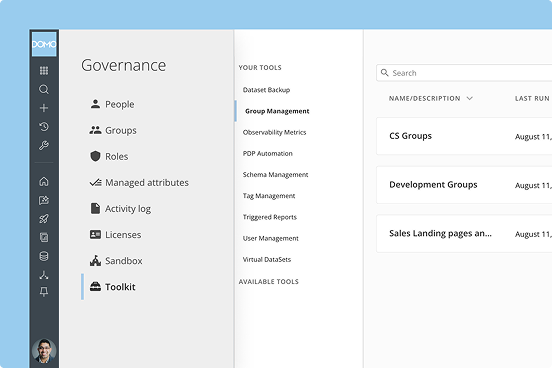

Build unwavering trust by starting with the governance you already have. Domo automatically inherits and enforces security policies from your cloud data warehouse, then layers on our own enterprise-grade tools like data lineage and quality alerts. This provides a comprehensive, end-to-end governance framework that gives you full control and confidence.

Integrate Domo seamlessly into your corporate security framework. Manage user access with your existing single sign-on (SSO) provider, and retain ultimate authority over data privacy with advanced options like Bring Your Own Key (BYOK) for Azure users.

Gain complete control over data access. Enforce security at the row-level by creating dynamic policies, and at the column-level by masking or hiding sensitive data fields. These permissions persist through all transformations, allowing users to securely run DataFlows on the precise data they are authorized to see and use.
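The idea that permissions "persist through all transformations" can be illustrated by applying row filters and column masks before any transformation logic runs. Downstream steps then only ever receive the permitted view. The sketch below is a hypothetical model (`apply_policies`, the `region` filter, and the masked `ssn` column are assumptions). It does not describe how Domo's PDP is implemented.

```python
from typing import Dict, List, Set

Row = Dict[str, object]


def apply_policies(rows: List[Row], allowed_regions: Set[str],
                   masked_cols: Set[str], region_col: str = "region") -> List[Row]:
    """Return only the rows and column values this user may see (hypothetical)."""
    visible = []
    for row in rows:
        if row[region_col] not in allowed_regions:
            continue  # row-level policy: drop rows outside the user's scope
        # column-level policy: mask sensitive fields instead of exposing them
        visible.append({k: ("***" if k in masked_cols else v) for k, v in row.items()})
    return visible


def total_by_region(rows: List[Row]) -> Dict[str, float]:
    """A downstream transformation that only ever sees the filtered view."""
    totals: Dict[str, float] = {}
    for row in rows:
        totals[row["region"]] = totals.get(row["region"], 0) + row["amount"]
    return totals


rows = [
    {"region": "EMEA", "amount": 100, "ssn": "123-45-6789"},
    {"region": "APAC", "amount": 50, "ssn": "987-65-4321"},
    {"region": "EMEA", "amount": 25, "ssn": "555-55-5555"},
]
view = apply_policies(rows, allowed_regions={"EMEA"}, masked_cols={"ssn"})
print(total_by_region(view))
```

An EMEA-only user running the same transformation gets EMEA totals and never sees an unmasked `ssn` value, because the policy is enforced before the transformation rather than after it.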

Build trust in your data by allowing authorized stewards to certify datasets as official and reliable. Full data lineage provides a transparent audit trail, showing exactly how data was transformed from source to visualization.

Use Domo to analyze automatically captured metadata on content adoption, user activity, and data performance. Track lineage from source to destination and gain deep visibility into pipeline performance, usage, and impact with system-generated DomoStats to optimize your analytics investment.

Proactively ensure the integrity of your data pipelines and automatically monitor datasets for issues like schema changes, row count anomalies, or data freshness. Trigger alerts so your team can catch and fix issues before they impact business decisions.
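The three checks named above (schema changes, row count anomalies, freshness) can each be expressed as a simple comparison against expectations. The sketch below uses a hypothetical `check_dataset` function. The 50% row-count tolerance and the average-of-recent-runs baseline are illustrative assumptions, not Domo defaults.

```python
from datetime import datetime, timedelta
from typing import Dict, List


def check_dataset(expected_schema: Dict[str, str], actual_schema: Dict[str, str],
                  row_count: int, recent_counts: List[int],
                  last_updated: datetime, now: datetime,
                  max_age: timedelta, tolerance: float = 0.5) -> List[str]:
    """Return a list of human-readable issues; empty means healthy (hypothetical)."""
    issues = []
    # Schema change: columns added, removed, or retyped.
    if actual_schema != expected_schema:
        added = sorted(set(actual_schema) - set(expected_schema))
        removed = sorted(set(expected_schema) - set(actual_schema))
        retyped = sorted(c for c in set(actual_schema) & set(expected_schema)
                         if actual_schema[c] != expected_schema[c])
        issues.append(f"schema change: added={added} removed={removed} retyped={retyped}")
    # Row count anomaly: relative deviation from the average of recent runs.
    if recent_counts:
        baseline = sum(recent_counts) / len(recent_counts)
        if baseline > 0 and abs(row_count - baseline) / baseline > tolerance:
            issues.append(f"row count {row_count} deviates from baseline {baseline:.0f}")
    # Freshness: data older than the allowed age.
    if now - last_updated > max_age:
        issues.append(f"stale: last updated {now - last_updated} ago")
    return issues


now = datetime(2024, 1, 10, 12)
issues = check_dataset({"id": "int", "amount": "float"},
                       {"id": "int", "amount": "str", "note": "str"},
                       row_count=40, recent_counts=[100, 110, 90],
                       last_updated=now - timedelta(days=2), now=now,
                       max_age=timedelta(days=1))
for issue in issues:
    print(issue)
```

Any non-empty result would be the point at which an alert is sent.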

Maintain a searchable record of all activity within your Domo instance. Track logins, view histories, data changes, and more to simplify compliance audits and gain full visibility into data usage.

Safely manage your content development lifecycle with full versioning and change history for every asset. Enable teams to develop and test in a dedicated sandbox, then promote content to production with a governed release process. With planned GitHub integration, you will be able to sync your Domo projects with your own repositories for even greater control.

Trusted by industry experts

FAQ

While spreadsheets are familiar, they are manual, error-prone, and not scalable. Magic ETL transforms data preparation into a visual, repeatable workflow. You can process millions of rows, save and reuse your logic, and document every step, providing a level of governance and efficiency that spreadsheets can't match.

Absolutely. Domo is designed for both accessibility and power. While Magic ETL handles most use cases without code, your data professionals also have full access to SQL, Python, and R scripting within Magic ETL. They can write custom, high-performance transformations and embed them directly into data pipelines, ensuring maximum flexibility for your most complex challenges.

Domo is built on a foundation of centralized governance. You can certify official datasets to guide users to trusted sources. Full data lineage shows exactly how data is being used and transformed. And with Personalized Data Permissions (PDP), you retain granular, row-level control, ensuring users only ever see the data they are authorized to, even during transformation.

A transformation is a single-purpose set of actions, such as cleaning a column or joining two datasets. An orchestration automates the entire end-to-end process. In Domo, you orchestrate by chaining multiple transformations together, setting them to run on a schedule or trigger, and adding alerts and notifications. This turns a manual, multi-step task into a reliable, automated data pipeline.
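The distinction can be made concrete in a few lines. Each transformation is a small function, and the orchestration layer runs them in order and reports the outcome. The sketch below is a generic, hypothetical illustration (`clean`, `drop_nulls`, `run_pipeline`, and the `notify` callback are assumptions). Scheduling and triggers are left out for brevity.

```python
from typing import Callable, Dict, List

Row = Dict[str, object]
Step = Callable[[List[Row]], List[Row]]


def clean(rows: List[Row]) -> List[Row]:
    """Transformation: trim stray whitespace from the name column."""
    return [{**r, "name": str(r["name"]).strip()} for r in rows]


def drop_nulls(rows: List[Row]) -> List[Row]:
    """Transformation: discard rows with no amount."""
    return [r for r in rows if r.get("amount") is not None]


def run_pipeline(rows: List[Row], steps: List[Step],
                 notify: Callable[[str], None]) -> List[Row]:
    """Orchestration: chain the steps and send an alert on success or failure."""
    for step in steps:
        try:
            rows = step(rows)
        except Exception as exc:
            notify(f"FAILED at {step.__name__}: {exc}")
            raise
    notify(f"SUCCEEDED: rows written = {len(rows)}")
    return rows


messages: List[str] = []
out = run_pipeline([{"name": " Ann ", "amount": 5}, {"name": "Bob", "amount": None}],
                   [clean, drop_nulls], notify=messages.append)
print(out, messages)
```

Here `notify` is just `list.append`. In a real pipeline it would stand in for an email, mobile, or Slack alert.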

Domo's transformation tools are built on a high-performance, scalable architecture. Rather than pulling massive datasets into a memory-limited tool, Domo pushes the processing down to the data warehouse layer, leveraging its power and scalability. This means you can build and run complex transformations on millions or billions of rows with speed and efficiency.
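The benefit of pushdown is that the database engine does the heavy work and only the result leaves it. The sketch below uses Python's built-in `sqlite3` as a stand-in for a cloud data warehouse; that substitution is an assumption for illustration, not Domo's engine. It computes the same aggregate two ways: by pulling every row into the client, and by pushing a `GROUP BY` down to the database.

```python
import sqlite3

# In-memory SQLite stands in for the warehouse in this sketch.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, amount REAL)")
conn.executemany("INSERT INTO sales VALUES (?, ?)",
                 [("EMEA", 100.0), ("APAC", 50.0), ("EMEA", 25.0)])

# Pull-based: every row crosses into the client, then Python aggregates.
pulled = {}
for region, amount in conn.execute("SELECT region, amount FROM sales"):
    pulled[region] = pulled.get(region, 0.0) + amount

# Pushdown: the engine aggregates; only one row per region comes back.
pushed = dict(conn.execute("SELECT region, SUM(amount) FROM sales GROUP BY region"))

print(pulled == pushed, pushed)
conn.close()
```

Both approaches produce the same answer. The difference is that the pull-based version transfers and holds every row in client memory, which is what becomes the bottleneck at millions or billions of rows.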

It can, but it doesn't have to. Domo is a full-stack platform that can manage the entire data journey, from ingestion to transformation and visualization, which can simplify your data stack. However, it is also designed to be flexible. You can use Domo to orchestrate and enhance your existing ETL/ELT processes, bringing modern self-service capabilities and governance to your current investments.