
With the rapid growth of AI, IoT, and social media, your data ecosystems have to be fast, scalable, and well governed. Organizations are processing massive volumes of data and executing dynamic workflows in real time. That's why data leaders like you are reassessing whether centralized or decentralized data management is the best way to achieve your business goals.

More and more organizations are finding that a hybrid data strategy, combining both centralized and decentralized workflows, offers the best of both. A recent survey on the state of enterprise data governance reports that around 65 percent of data leaders prefer hybrid approaches, including variations of hybrid (29 percent) or federated (36 percent) data governance models, while 36 percent favor a purely centralized approach.

To better understand a hybrid strategy, imagine a commercial kitchen with a professionally managed pantry. Each chef has their own setup—complete with a torch, blender, and other tools they regularly use. But all the ingredients they use—produce, proteins, and seasonings—come from a single, locked, and carefully curated pantry. Before any ingredient goes into a dish, it undergoes meticulous quality control checks.

A hybrid data architecture follows the same approach. A centralized data governance policy is like those standard kitchen ingredients. Using these “ingredients,” your data teams (the chefs) can then prepare different “recipes” according to their specific areas of expertise (their domains).

In this blog post, we compare traditional and hybrid data governance approaches to help data leaders like you build a strong, dependable architecture that can scale with your business, no matter how fast it grows.

For data teams, it’s important to understand the difference between centralized and decentralized data architectures. It allows them to evaluate which model or combination of models best aligns with the goals of the business.

Let’s look at each of the data models in detail.

A centralized data architecture brings all your data together in a central location. It gathers all your persistent storage, transformation logic, and metadata management into a single, logical platform that's centrally controlled. This is typically an enterprise data warehouse (EDW), a cloud lakehouse (such as Databricks, Snowflake, or BigQuery), or a specialized operational data store. This architecture relies heavily on centralized data storage and a single repository for consistency.

In contrast, a decentralized data model gives individual business units, product teams, or even different geographic regions a way to have their own persistent storage and transformation pipelines. Common examples include domain-oriented data marts, team-specific Snowflake accounts, or data mesh-based data products. These setups often use decentralized data storage and multiple repository instances to move fast and stay agile.

The following table presents a side-by-side technical comparison across dimensions that most frequently determine architectural success or failure for enterprises.

Data teams frequently confuse federated governance with decentralized governance, but they’re actually different concepts.

With a federated model, centralized data governance defines the enterprise-wide standards, policies, and reference architectures. This includes defining semantic terms, metadata formats, and compliance controls. Yet the day-to-day ownership, data product stewardship, and operational execution are handled separately by different teams.

A prime example is the data mesh architecture. Data mesh treats data as products owned by specific teams but explicitly mandates a federated computational governance layer that covers data lineage across teams, standardized interfaces, and policy-as-code enforcement. This is why most working data mesh systems use a federated approach rather than being truly decentralized.
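To make policy-as-code enforcement concrete, here is a minimal sketch of how a central team might express policies as plain functions that every domain's data product metadata must pass. The `DataProduct` schema, the policy names, and the 365-day retention rule are all assumptions for illustration; they do not come from any specific data mesh platform.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class DataProduct:
    """Metadata a domain team publishes for one data product (hypothetical schema)."""
    name: str
    owner: str
    classification: str  # e.g. "public", "internal", "pii"
    retention_days: int
    upstream: list[str] = field(default_factory=list)  # lineage: source products


# A policy is a name plus a predicate over the product metadata.
Policy = tuple[str, Callable[[DataProduct], bool]]

# Central governance defines the policies once (illustrative rules only).
CENTRAL_POLICIES: list[Policy] = [
    ("has_owner", lambda p: bool(p.owner.strip())),
    ("known_classification", lambda p: p.classification in {"public", "internal", "pii"}),
    ("pii_retention_max_365_days",
     lambda p: p.classification != "pii" or p.retention_days <= 365),
]


def violations(product: DataProduct) -> list[str]:
    """Return the names of all central policies the product fails."""
    return [name for name, check in CENTRAL_POLICIES if not check(product)]


# Domain teams own their products; the same central checks apply to all of them.
marketing = DataProduct("campaign_events", "marketing-data", "pii", 730, ["web_clicks"])
finance = DataProduct("ledger_daily", "finance-data", "internal", 2555)

print(violations(marketing))  # ['pii_retention_max_365_days']
print(violations(finance))    # []
```

In this sketch the domains keep ownership of their products, while the policy list lives in one place and can be versioned and reviewed like any other code.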

Companies are backing this up with real investment. The global data mesh market was valued at roughly US$1.5 billion in 2024 and is forecasted to surpass US$3.5 billion by 2030. Large companies clearly recognize that federated approaches deliver the right balance of central control over data definitions (centralized semantic governance) and individual teams owning their own data (decentralized data ownership).

Due to the latest data trends, most modern ecosystems no longer fit strictly into centralized or decentralized frameworks. Many companies attempting to shift from centralized to decentralized quickly run into fragmented governance and compliance gaps, highlighting why a pure migration rarely succeeds without hybrid elements.

Conversely, teams moving from decentralized to centralized structures often face bottlenecks and lost domain agility. That's why opting for one extreme leads to notable trade-offs, especially in these areas:

These architectural issues surface routinely in feature-store initiatives, embedded analytics programs, and regulatory reporting cycles. Taken together, they demand a hybrid architecture that simultaneously delivers:

A hybrid data strategy merges centralized and decentralized data models. Typically, it centralizes data management functions, such as security, quality, definitions, and compliance. At the same time, it decentralizes data access and execution, giving teams self-service analytics, domain-specific apps, and local exploration.

This is achieved through a logically centralized or virtual semantic layer that acts as the single contract between raw sources and domain-specific applications. At the same time, physical storage and compute remain distributed.
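One way to picture the semantic layer as a contract is a single metric definition that compiles to different physical queries per domain. The sketch below is only an illustration: the metric, the domain names, and the table and column names are hypothetical, and a real semantic layer would handle joins, filters, and access control as well.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MetricDefinition:
    """Centrally governed definition, written against logical column names."""
    name: str
    description: str
    expression: str  # placeholders like {gross} are logical columns


# Centralized: one agreed definition per metric.
SEMANTIC_LAYER = {
    "net_revenue": MetricDefinition(
        name="net_revenue",
        description="Gross sales minus refunds",
        expression="SUM({gross}) - SUM({refunds})",
    ),
}

# Decentralized: each domain binds logical columns to its own physical storage.
DOMAIN_BINDINGS = {
    "emea_sales": {"table": "emea.orders", "gross": "order_total_eur", "refunds": "refund_eur"},
    "us_sales": {"table": "us_dw.fact_sales", "gross": "gross_usd", "refunds": "returns_usd"},
}


def compile_metric(metric: str, domain: str) -> str:
    """Render the shared metric definition as SQL for one domain's tables."""
    definition = SEMANTIC_LAYER[metric]
    binding = DOMAIN_BINDINGS[domain]
    columns = {key: value for key, value in binding.items() if key != "table"}
    expression = definition.expression.format(**columns)
    return f"SELECT {expression} AS {definition.name} FROM {binding['table']}"


print(compile_metric("net_revenue", "emea_sales"))
print(compile_metric("net_revenue", "us_sales"))
```

Both domains compute the same metric by the same formula, even though the data never leaves their own tables.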

This hybrid shift is happening fast because adoption of the self-service analytics tools that make decentralized execution practical is exploding. In fact, the global self-service BI market is projected to grow to $26.5 billion by 2032, compared to roughly $8 billion in 2025. This growth is driven primarily by the push to make data available to everyone without sacrificing control. In other words, the market itself is voting for a hybrid model.

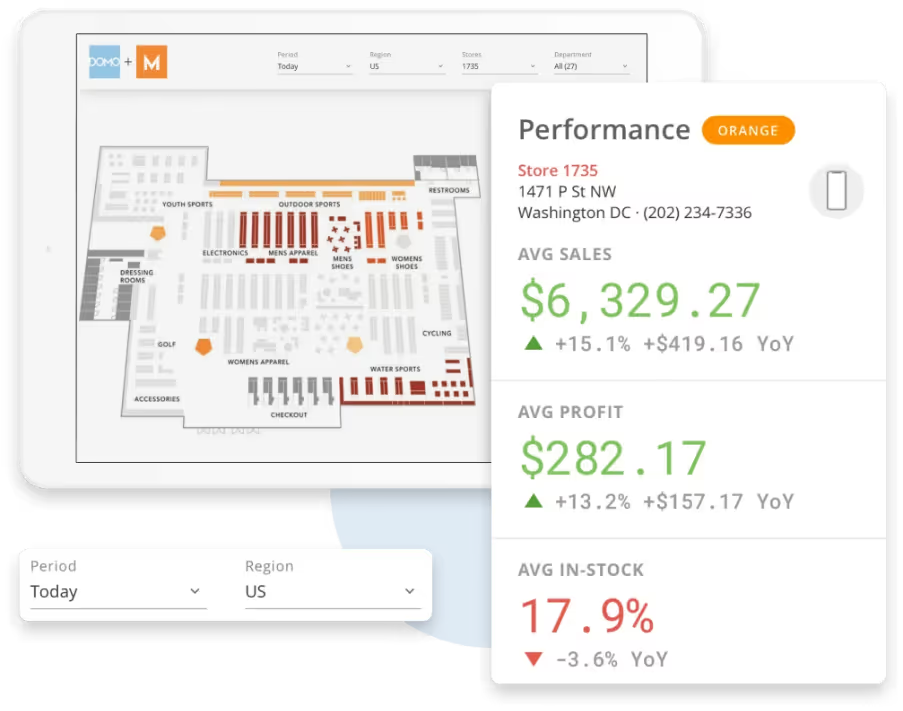

Platforms like Domo speed up this adoption by providing self-service BI capabilities that scale across large user bases while maintaining centralized control over data quality and compliance.

Going back to our original analogy, it’s the shared commercial kitchen in action:

This model gives you:

You can summarize a hybrid data architecture model in four core layers:

Together, these layers give organizations a way to balance scalability with control. For instance, in DORA-compliant financial institutions, enterprise governance ensures regulatory audit trails, while decentralized consumption enables real-time risk analysis.

Here are six practical steps data leaders can use to roll out a hybrid approach effectively:

Clarify which decisions, policies, and processes must stay centrally owned and which ones you can safely delegate to business units. Start with a direct conversation among leadership. Answer one simple question: What must stay in the center so we don’t break compliance or trust, and what can we safely hand to the people who actually live in the data every day?

Consider using a RACI (Responsible, Accountable, Consulted, and Informed) matrix that:

For instance, TTCU centralized loan decision data but allowed branch-level teams to use dashboards for local insights, demonstrating a clear division of responsibilities between centralized policy and decentralized execution. As a result, the credit union now processes about $4 million in loans per loan officer per month, up from $400,000 a month per loan officer before.
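A RACI matrix like the one described above can also be kept as data and checked automatically. The following is a minimal sketch; the decisions, role names, and the rule that each decision needs exactly one Accountable and at least one Responsible party are illustrative assumptions, not taken from any of the organizations mentioned here.

```python
VALID_CODES = {"R", "A", "C", "I"}

# Hypothetical decisions mapped to roles and their RACI codes.
RACI = {
    "define_pii_masking_policy": {
        "central_governance": "A", "security": "R", "domain_stewards": "C", "analysts": "I",
    },
    "build_branch_dashboard": {
        "domain_stewards": "A", "analysts": "R", "central_governance": "I",
    },
    # Deliberately incomplete: nobody is Accountable.
    "approve_new_data_source": {
        "central_governance": "R", "domain_stewards": "C",
    },
}


def raci_problems(matrix: dict[str, dict[str, str]]) -> list[str]:
    """List decisions that break the basic RACI rules assumed in this sketch."""
    problems = []
    for decision, roles in matrix.items():
        codes = list(roles.values())
        invalid = sorted(set(codes) - VALID_CODES)
        if invalid:
            problems.append(f"{decision}: invalid codes {invalid}")
        accountable = codes.count("A")
        if accountable != 1:
            problems.append(f"{decision}: expected exactly one Accountable, found {accountable}")
        if "R" not in codes:
            problems.append(f"{decision}: no Responsible party")
    return problems


for problem in raci_problems(RACI):
    print(problem)
# approve_new_data_source: expected exactly one Accountable, found 0
```

Keeping the matrix in a reviewable file makes it easier to spot decisions that nobody owns before they turn into governance gaps.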

Establish shared standards for data quality, security, metadata, and access across all teams. In practice, this means publishing:

For instance, Community Fibre consolidated its previously siloed systems into Domo, giving all 600+ employees access to trusted, governed data. Their Chief Information Officer, Chris Williams, says that “Domo has been an absolute cultural change for us.”

Appoint data stewards within each domain and connect them to the central governance team. These stewards act as a bi-directional interface: they translate enterprise policies into domain-specific practices, like tagging rules for marketing campaign data. They also surface new requirements, such as domain-specific retention periods, back to the central governance team for approval and propagation.

Make governed data sets discoverable regardless of where someone sits. Ensure visibility, lineage tracking, and discoverability across decentralized data sources. The data catalog must:

For instance, Falvey Insurance Group used Domo to bring all of its data together into one unified system, making it accessible to anyone across the company.
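The catalog requirements above (visibility, lineage tracking, and discoverability) can be sketched with a small in-memory model. This is an assumption-laden illustration: the `CatalogEntry` fields, the dataset names, and the tag-based search are hypothetical and do not represent how any particular catalog product works.

```python
from dataclasses import dataclass, field


@dataclass
class CatalogEntry:
    """One governed data set as it appears in the catalog (hypothetical fields)."""
    name: str
    domain: str
    owner: str
    certified: bool = False
    tags: set[str] = field(default_factory=set)
    upstream: list[str] = field(default_factory=list)  # direct sources


class Catalog:
    def __init__(self) -> None:
        self._entries: dict[str, CatalogEntry] = {}

    def register(self, entry: CatalogEntry) -> None:
        self._entries[entry.name] = entry

    def search(self, tag: str, certified_only: bool = True) -> list[str]:
        """Find data sets by tag, regardless of which domain owns them."""
        return sorted(
            e.name for e in self._entries.values()
            if tag in e.tags and (e.certified or not certified_only)
        )

    def lineage(self, name: str) -> list[str]:
        """Return every upstream data set, direct or indirect, sorted by name."""
        seen: set[str] = set()
        stack = list(self._entries[name].upstream)
        while stack:
            current = stack.pop()
            if current in seen:
                continue
            seen.add(current)
            entry = self._entries.get(current)
            if entry is not None:
                stack.extend(entry.upstream)
        return sorted(seen)


catalog = Catalog()
catalog.register(CatalogEntry("raw_claims", "claims", "claims-team", tags={"claims"}))
catalog.register(CatalogEntry("claims_clean", "claims", "claims-team", True, {"claims"}, ["raw_claims"]))
catalog.register(CatalogEntry("premiums", "underwriting", "uw-team", True, {"premiums"}))
catalog.register(CatalogEntry("loss_ratio", "finance", "finance-team", True,
                              {"claims", "premiums"}, ["claims_clean", "premiums"]))

print(catalog.search("claims"))       # ['claims_clean', 'loss_ratio']
print(catalog.lineage("loss_ratio"))  # ['claims_clean', 'premiums', 'raw_claims']
```

Searching returns only certified data sets by default, which mirrors the idea that discoverability should point people toward governed data first.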

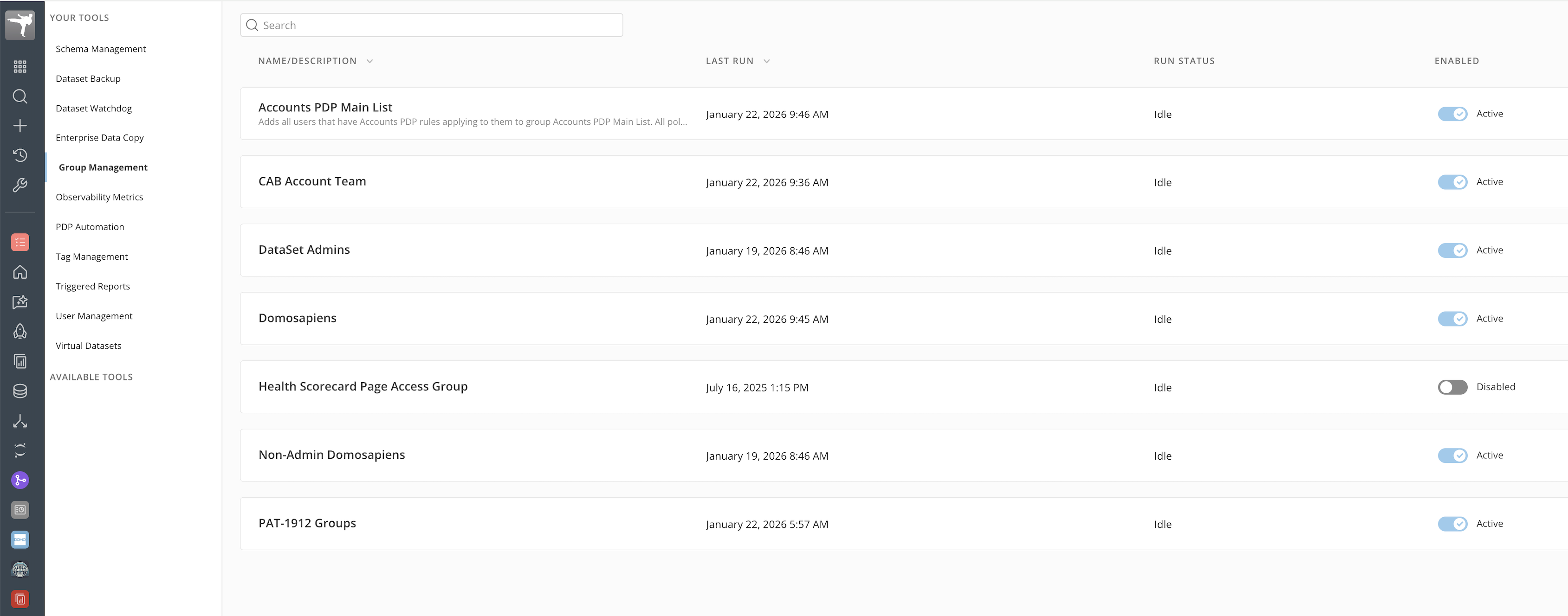

Use built-in tools to enforce quality checks, certification workflows, access reviews, and compliance alerts automatically. Modern platforms execute these controls at the integration layer via policy engines that trigger:

This eliminates hands-on gatekeeping while producing the immutable audit trails that regulations require. For instance, Domo’s advanced data governance offers automated alerts and compliance checks, which reduce the need for manual enforcement.
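As a rough illustration of an automated check that leaves an audit trail, the sketch below runs a required-fields check and records the result in a hash-chained log. Note the swap: a hash chain makes the log tamper-evident rather than truly immutable, and the function names, event fields, and sample rows are all hypothetical rather than part of any platform's API.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder hash for the first log entry


def append_audit(log: list[dict], event: dict) -> None:
    """Append an event whose hash depends on the previous entry's hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS_HASH
    payload = json.dumps(event, sort_keys=True)
    entry_hash = hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()
    log.append({"event": event, "prev_hash": prev_hash, "hash": entry_hash})


def verify_audit(log: list[dict]) -> bool:
    """Recompute the chain; any edited or reordered entry breaks it."""
    prev_hash = GENESIS_HASH
    for entry in log:
        payload = json.dumps(entry["event"], sort_keys=True)
        expected = hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()
        if entry["prev_hash"] != prev_hash or entry["hash"] != expected:
            return False
        prev_hash = entry["hash"]
    return True


def run_quality_check(dataset: str, rows: list[dict], required: list[str], log: list[dict]) -> bool:
    """Fail the data set if any row is missing a required field, and log the outcome."""
    failed = sum(1 for row in rows if any(row.get(col) in (None, "") for col in required))
    passed = failed == 0
    append_audit(log, {
        "dataset": dataset, "check": "required_fields",
        "rows": len(rows), "failed_rows": failed, "passed": passed,
    })
    return passed


audit_log: list[dict] = []
rows = [{"loan_id": 1, "amount": 2500}, {"loan_id": 2, "amount": None}]
ok = run_quality_check("loans", rows, ["loan_id", "amount"], audit_log)
print(ok, verify_audit(audit_log))  # False True
```

If someone later edits a logged event, `verify_audit` returns `False`, which is the property an auditor cares about.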

Track metrics like adoption, cycle time reduction, governance coverage, and business impact. Adjust the balance as your organization matures. Leading teams can monitor specific indicators, such as:

These indicators are reviewed periodically by the central governance team to adjust metric thresholds as new use cases emerge.
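The metrics named above can be computed with simple ratios. The sketch below assumes one possible definition for each (certified data sets over total data sets, active users over licensed users, and relative reduction in cycle time); the input numbers and the target thresholds are made up for illustration.

```python
def ratio(part: float, whole: float) -> float:
    """Safe division that returns 0.0 when the denominator is zero."""
    return part / whole if whole else 0.0


def governance_kpis(certified: int, total: int, active_users: int,
                    licensed_users: int, cycle_before: float, cycle_after: float) -> dict[str, float]:
    """Compute three illustrative indicators as fractions between 0 and 1."""
    return {
        "governance_coverage": ratio(certified, total),
        "adoption_rate": ratio(active_users, licensed_users),
        "cycle_time_reduction": ratio(cycle_before - cycle_after, cycle_before),
    }


def below_target(kpis: dict[str, float], targets: dict[str, float]) -> list[str]:
    """Return the indicators that miss their target, for the periodic review."""
    return sorted(name for name, target in targets.items() if kpis.get(name, 0.0) < target)


# Hypothetical inputs: 120 of 160 data sets certified, 450 of 600 users active,
# and report cycle time down from 10 days to 4 days.
kpis = governance_kpis(120, 160, 450, 600, 10.0, 4.0)
targets = {"governance_coverage": 0.8, "adoption_rate": 0.7, "cycle_time_reduction": 0.5}

print(kpis)
print(below_target(kpis, targets))  # ['governance_coverage']
```

Because the thresholds live in a plain dictionary, the central governance team can adjust them during each review without touching the calculation.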

It's no longer about “centralized or decentralized.” The question every CDO, analytics VP, and branch manager is asking right now is: “How fast can my people get answers they actually trust?”

Domo provides a shared kitchen for implementing trustworthy hybrid data strategies, combining centralized data accuracy with decentralized data access for teams. With Domo, organizations get:

If you’re ready to build the data strategy that increases trust and speed simultaneously, learn more about Domo’s data governance and put a hybrid data strategy to work today.