The 5 Key Components of a Data Pipeline

Companies and organizations that work with data have to manage the enormous amounts of data they collect in order to obtain insights from it.

The volume, velocity, and variety of big data can easily overwhelm even the most experienced data scientists. That is why organizations use a data pipeline to transform raw data into high-quality, analyzed information.

A data pipeline has five key components: storage, preprocessing, analysis, applications, and delivery. Understanding these components helps organizations work with big data and act on the insights it generates.

In this article, we will break down each component of a data pipeline, explain what each component achieves, and outline the benefits of a strong data pipeline integration for business intelligence.

Why implement a data pipeline?

A data pipeline helps organizations make sense of their big data and transform it into high-quality information that can be used for analysis and business intelligence.

No matter how small or large, all companies must manage data to stay relevant in today’s competitive market.

Businesses use this information to identify customers’ needs, market products, and drive revenue.

Data pipeline integration is a huge part of this process because it brings together the five key components that companies need to manage big data.

The five components of a data pipeline

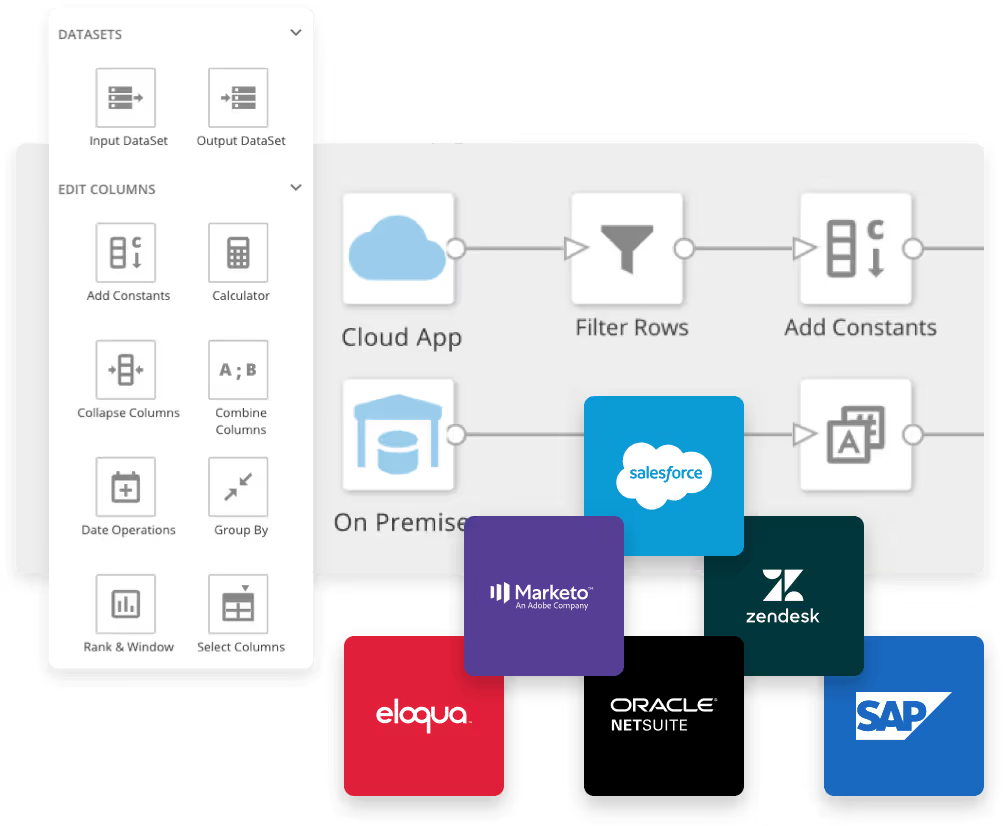

A data pipeline has several essential components that work together to move, transform, and deliver data efficiently. While our original framework included storage, preprocessing, analysis, applications, and delivery, it’s helpful to also consider other important aspects like data ingestion, orchestration, and monitoring. Here’s a breakdown of these key components and how they support a strong data pipeline.

1. Data Sources and Ingestion

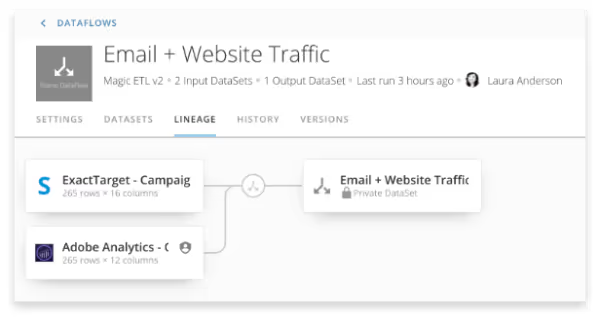

This first component includes both the origin points of your data (databases, APIs, log files, streaming sources) and the ingestion process — which is how data is collected and pulled into the pipeline. Effective ingestion ensures that data is complete, timely, and ready for downstream processing.
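To make ingestion more concrete, here is a minimal Python sketch that assumes two hypothetical sources: a CSV export from an orders database and a JSON Lines payload from an API. The names `ingest_csv`, `ingest_jsonl`, `ingest`, and `REQUIRED_FIELDS` are illustrative and do not come from any product. Each record is tagged with its source and load time. Records missing required fields are set aside instead of being passed silently downstream, which is one simple way to check completeness.

```python
import csv
import io
import json
from datetime import datetime, timezone

# Hypothetical schema for this sketch: every record needs these fields.
REQUIRED_FIELDS = ("order_id", "amount")


def ingest_csv(text, source):
    """Parse CSV text into dict records tagged with their origin."""
    return [dict(row, _source=source) for row in csv.DictReader(io.StringIO(text))]


def ingest_jsonl(text, source):
    """Parse JSON Lines text (one JSON object per line) into tagged records."""
    return [dict(json.loads(line), _source=source)
            for line in text.splitlines() if line.strip()]


def ingest(batches):
    """Pull records from several sources and split complete from incomplete ones."""
    loaded_at = datetime.now(timezone.utc).isoformat()
    accepted, rejected = [], []
    for parser, text, source in batches:
        for record in parser(text, source):
            record["_loaded_at"] = loaded_at
            # Treat absent keys and empty strings as missing values.
            missing = [f for f in REQUIRED_FIELDS if record.get(f) in (None, "")]
            (rejected if missing else accepted).append(record)
    return accepted, rejected


# Illustrative inputs, not real data.
csv_export = "order_id,amount\n1,19.99\n2,\n"
api_payload = '{"order_id": 3, "amount": 5.0}\n{"amount": 7.5}\n'

accepted, rejected = ingest([
    (ingest_csv, csv_export, "orders_db"),
    (ingest_jsonl, api_payload, "orders_api"),
])
print(len(accepted), "accepted,", len(rejected), "rejected")
```

In a real pipeline, the rejected records might be routed to a quarantine table for review. That routing is a design assumption of this sketch, not a requirement.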

2. Preprocessing and Transformation

After data is ingested, it needs to be cleaned, standardized, and transformed into a usable format. This includes correcting errors, consolidating formats, enriching data, and tagging it for specific types of analysis. By preparing your data properly, you set the stage for accurate insights and smooth analysis later.
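The four preprocessing steps named above can be sketched in Python. The sketch assumes raw order records whose dates arrive in mixed formats and whose amounts arrive as strings such as `"$1,250.00"` (US-style separators assumed). It corrects errors (stray whitespace, inconsistent casing), consolidates formats (ISO dates, numeric amounts), enriches records (a region looked up from the country), and tags them (a size segment for later analysis). The region lookup, the date formats, and the 1,000 threshold are all hypothetical.

```python
from datetime import datetime

# Date formats assumed to appear in the raw data for this sketch.
DATE_FORMATS = ("%Y-%m-%d", "%m/%d/%Y", "%d %b %Y")
# Hypothetical enrichment table.
REGION_BY_COUNTRY = {"US": "North America", "CA": "North America", "DE": "Europe"}


def parse_date(value):
    """Normalize a date string to ISO format; return None if no format matches."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(value.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    return None  # flag unparseable dates instead of guessing


def transform(record):
    """Clean, standardize, enrich, and tag one raw order record."""
    amount = float(str(record["amount"]).replace("$", "").replace(",", "").strip())
    country = record["country"].strip().upper()
    return {
        "order_id": int(record["order_id"]),
        "order_date": parse_date(record["order_date"]),
        "amount": round(amount, 2),
        "country": country,
        "region": REGION_BY_COUNTRY.get(country, "Unknown"),   # enrichment
        "segment": "large" if amount >= 1000 else "standard",  # tag for analysis
    }


# Illustrative raw records, not real data.
raw = [
    {"order_id": "1", "order_date": "2024-03-05", "amount": "$1,250.00", "country": " us "},
    {"order_id": "2", "order_date": "03/07/2024", "amount": "89.5", "country": "de"},
]
clean = [transform(r) for r in raw]
for row in clean:
    print(row)
```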

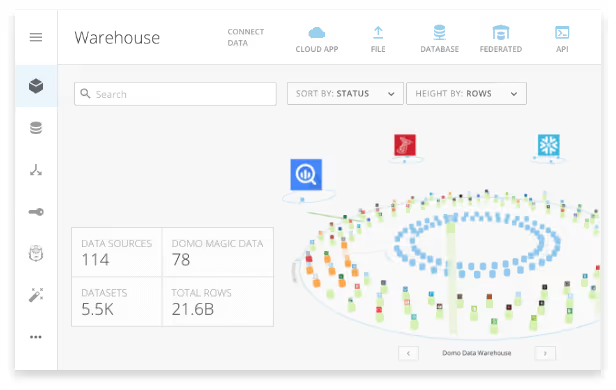

3. Storage

Once preprocessed, data is stored securely in warehouses, data lakes, or specialized databases. Storage provides scalable, reliable access to data so teams can analyze and visualize it whenever needed.
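As a small illustration of reliable storage, the sketch below uses Python's built-in `sqlite3` module as a stand-in for a warehouse. A production pipeline would typically target a data warehouse or lake instead. The `orders` table and its columns are hypothetical. A primary key combined with SQLite's `INSERT OR REPLACE` means that reloading the same batch does not create duplicate rows. Writing inside a transaction means a failed load leaves no partial data behind.

```python
import sqlite3

# Cleaned rows from the preprocessing step (illustrative values).
rows = [
    (1, "2024-03-05", 1250.0, "US", "North America"),
    (2, "2024-03-07", 89.5, "DE", "Europe"),
]

SCHEMA = """
CREATE TABLE IF NOT EXISTS orders (
    order_id   INTEGER PRIMARY KEY,
    order_date TEXT NOT NULL,
    amount     REAL NOT NULL,
    country    TEXT,
    region     TEXT
)
"""


def load(conn, rows):
    """Write rows in one transaction; the primary key makes reloads idempotent."""
    with conn:  # commits on success, rolls back if any insert fails
        conn.executemany(
            "INSERT OR REPLACE INTO orders VALUES (?, ?, ?, ?, ?)", rows
        )


conn = sqlite3.connect(":memory:")  # in-memory database for the sketch
conn.execute(SCHEMA)
load(conn, rows)
load(conn, rows)  # re-running the same batch does not create duplicates

count = conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0]
print(count, "rows stored")
```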

4. Analysis and Applications

This component turns your prepared data into actionable insights. By using analytical tools, BI applications, and models, you can uncover trends, build dashboards, and generate reports that guide decision-making and drive strategy.
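The kind of analysis a dashboard might display can be sketched in a few lines of Python. This sketch groups revenue by month and by region, then computes month-over-month growth as (current - previous) / previous. The order rows are made-up sample values, and `revenue_by` is a hypothetical helper. A BI tool would usually run equivalent aggregations as queries against the storage layer.

```python
from collections import defaultdict

# Illustrative, made-up orders standing in for rows read from storage.
orders = [
    {"order_date": "2024-01-15", "region": "Europe", "amount": 400.0},
    {"order_date": "2024-01-20", "region": "North America", "amount": 600.0},
    {"order_date": "2024-02-03", "region": "Europe", "amount": 500.0},
    {"order_date": "2024-02-18", "region": "North America", "amount": 700.0},
]


def revenue_by(rows, key):
    """Sum order amounts per group, where key(row) returns the group label."""
    totals = defaultdict(float)
    for row in rows:
        totals[key(row)] += row["amount"]
    return dict(sorted(totals.items()))


monthly = revenue_by(orders, key=lambda r: r["order_date"][:7])  # "YYYY-MM"
by_region = revenue_by(orders, key=lambda r: r["region"])

# Month-over-month growth: (current - previous) / previous.
months = list(monthly)
growth = {
    cur: (monthly[cur] - monthly[prev]) / monthly[prev]
    for prev, cur in zip(months, months[1:])
}

print(monthly)    # revenue per month
print(by_region)  # revenue per region
print(growth)     # a trend a dashboard might chart
```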

5. Orchestration, Monitoring, and Delivery

The final component ties it all together. Orchestration automates and manages the sequence of tasks in your pipeline, ensuring everything runs in the right order. Monitoring and logging track performance and catch errors early. Finally, delivery ensures insights are shared with the right people through dashboards, reports, or embedded tools — so data can drive action across the organization.
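The sketch below illustrates orchestration, monitoring, and delivery using only the Python standard library (`graphlib` requires Python 3.9 or later). Dedicated orchestrators such as Apache Airflow provide scheduling, retries, and alerting in production. This sketch only mimics the core ideas: tasks run in dependency order, each attempt is logged with its duration, transient failures are retried, and the last task formats a report for delivery. The task names, the simulated failure, and `run_pipeline` itself are hypothetical.

```python
import logging
import time
from graphlib import TopologicalSorter  # standard library, Python 3.9+

logging.basicConfig(level=logging.INFO, format="%(levelname)s %(message)s")
log = logging.getLogger("pipeline")


def run_pipeline(tasks, dependencies, retries=2, delay=0.0):
    """Run tasks in dependency order, retrying failures and logging each attempt.

    tasks maps a task name to a function that takes the shared context dict;
    dependencies maps a task name to the set of tasks that must run first.
    """
    order, context = [], {}
    for name in TopologicalSorter(dependencies).static_order():
        for attempt in range(1, retries + 2):  # first try plus `retries` retries
            start = time.perf_counter()
            try:
                context[name] = tasks[name](context)
            except Exception as exc:
                log.warning("%s failed on attempt %d: %s", name, attempt, exc)
                if attempt > retries:
                    raise  # out of retries: stop the run and surface the error
                time.sleep(delay)
            else:
                elapsed = time.perf_counter() - start
                log.info("%s succeeded in %.3fs (attempt %d)", name, elapsed, attempt)
                order.append(name)
                break
    return order, context


# Hypothetical tasks; "ingest" fails once to simulate a transient outage.
calls = {"ingest": 0}


def ingest(ctx):
    calls["ingest"] += 1
    if calls["ingest"] == 1:
        raise ConnectionError("source temporarily unavailable")
    return [{"region": "Europe", "amount": 900.0},
            {"region": "North America", "amount": 1300.0}]


def transform(ctx):
    return {row["region"]: row["amount"] for row in ctx["ingest"]}


def store(ctx):
    return len(ctx["transform"])  # stand-in for a warehouse write


def deliver(ctx):
    lines = [f"{region}: {amount:,.2f}" for region, amount in ctx["transform"].items()]
    return "Revenue by region\n" + "\n".join(lines)  # e.g. emailed or shown on a dashboard


order, context = run_pipeline(
    tasks={"ingest": ingest, "transform": transform, "store": store, "deliver": deliver},
    dependencies={"transform": {"ingest"}, "store": {"transform"}, "deliver": {"store"}},
)
print(context["deliver"])
```

Keeping the dependency graph separate from the task functions means the run order can be changed without editing the tasks. This is the same separation that full orchestration tools make.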

The benefits of a data pipeline integration

Together, the five components of a data pipeline deliver benefits that allow organizations to work with big data and easily generate high-quality insights.

A strong data pipeline integration allows companies to:

- Reduce costs: By using a data pipeline that integrates all five components, businesses can cut costs by reducing the amount of storage needed, for example by cleaning and deduplicating data before it is stored.

- Speed up processes: A data pipeline that integrates all five components can reduce delivery time, making valuable information available much faster.

- Work with big data: Because big data is difficult to manage, it’s important for companies to have a strategy in place to store, process, analyze, and deliver it easily.

- Gain insights: The ability to analyze big data allows companies to gain insights that give them a competitive advantage in the marketplace.

- Include big data in business decisions: Organizational data is critical to making effective decisions that drive companies from one level to the next.

Businesses can work with all their information and create high-quality reports by using a data pipeline integration. This strategy makes it possible for organizations to easily find new opportunities within their existing customer base and increase revenue.

How to implement a data pipeline

By integrating all five components of a data pipeline into their strategy, companies can work with big data and produce high-quality insights that give them a competitive advantage.

The first step to integrating these components is to select the right infrastructure. By choosing an infrastructure that supports cloud computing, businesses can handle big data at scale, including in real time.

Next, they need to find a way to deliver information securely. By using cloud-based reporting tools, companies can ensure that the most up-to-date data is being used and that all employees have access to current reports.

Finally, companies should consider how to integrate their existing infrastructure with any new solutions. For example, if an organization currently relies on a traditional data warehouse with many required integrations, it may be difficult to implement a new cloud-based solution.

When implemented successfully, a data pipeline integration allows companies to take full advantage of big data and produce valuable insights. By using this strategy, organizations can gain a competitive advantage in the marketplace.

Conclusion

The five components of a data pipeline (data sources and ingestion; preprocessing and transformation; storage; analysis and applications; and orchestration, monitoring, and delivery) form the foundation for turning big data into high-value insights. When integrated correctly, these components empower organizations to make smarter decisions, act quickly, and stay ahead of the competition. With the right tools and expertise, any organization can build an effective data pipeline that drives success. So start building your data pipeline and see the benefits it brings to your business!
