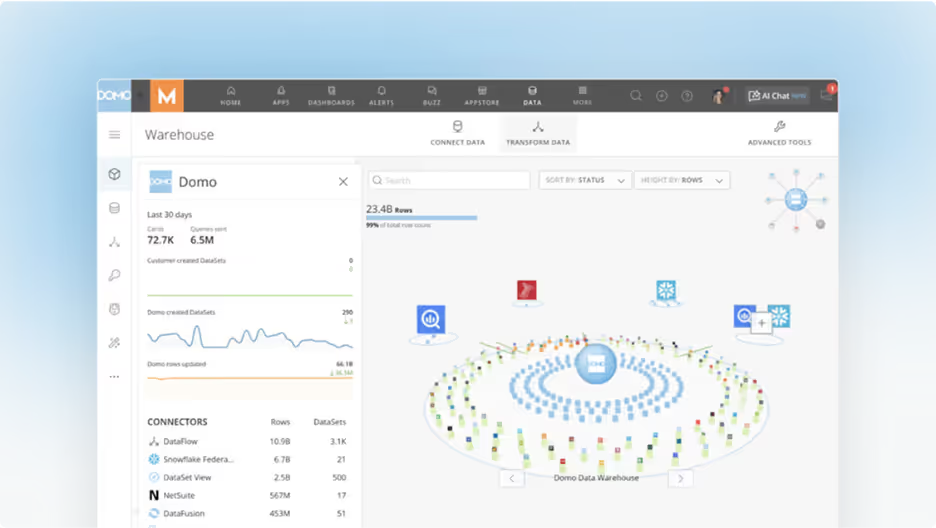

Hundreds of hours of manual processes were saved when predicting game attendance by using Domo's automated dataflow engine.

When an AI initiative fails, the cause is rarely a weak underlying LLM. More often it's a fragile AI data pipeline. Gartner’s latest forecast suggests that 60 percent of AI projects will flatline by 2026, not because of poor design, but because the data isn't ready for AI: it's late, messy, or biased.

This issue isn’t new. In 1996, MIT asked companies about data quality, and over 60 percent said they were drowning in poor data. Fast-forward three decades. Data lakes have become “oceans,” and we have unprecedented computing power. But one toxic row can still trigger hallucinations, lead to overfitting, or introduce biases that sink the company’s reputation, and maybe more importantly, budgets.

While we now have petabytes at our fingertips and enormous processing capacity, data quality management remains the gatekeeper. And if you skip practices like adding context, tracking lineage, or enforcing enterprise data governance, even state-of-the-art models can produce unreliable outputs.

According to Lee James, senior partner at Domo, “Pipelines are no longer passive plumbing. They’re alive. AI workloads are dynamic. Enrich. Label. Contextualize. Repeat. Or die.”

This guide is the practical playbook for engineers and architects to build AI data pipelines that survive production, not just demos. Let’s dive in.

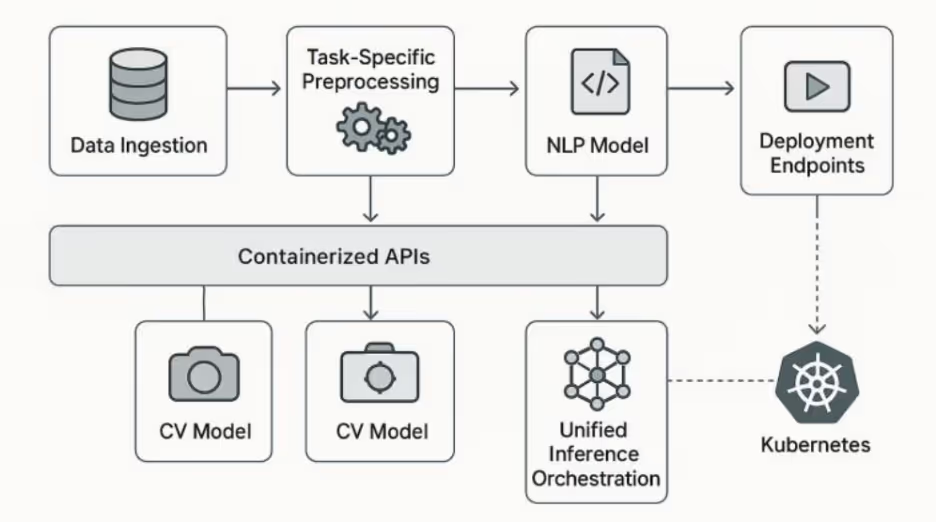

An AI data pipeline is an end-to-end, unified infrastructure that continuously moves data from its source to a production model. It ingests raw inputs from diverse sources, applies validation and transformation rules, prepares structured features for model training and inference, executes deployment workflows, and then incorporates live performance feedback to maintain accuracy and reliability across the full AI lifecycle.

Traditional ETL pipelines were built to bring structured data into warehouses to support scheduled reports. You’d run them nightly, generate dashboards, and call it a day.

Contrast this with AI pipelines, which are designed for unstructured and real-time data. They support live inference, adapt to data drift, and operate continuously without waiting for batch updates.

“With traditional pipelines, they were built for dashboards,” James explains. “But AI pipelines must be designed for decision-making with continuous enrichment.”

That shift requires a complete rethinking of how we architect and execute our data systems.

Here are the five core stages of an AI data pipeline. If you skip any of these stages, your AI pipeline is at risk of collapsing in production.

To support these stages, your pipeline typically includes:

While most data engineers can integrate tools, the real challenge lies in making the pipeline adaptable. Data schemas shift. Sources disappear overnight. Models drift. Rigid systems break under this pressure. But a smart one evolves.

An effective AI data pipeline design is layered, tightly orchestrated, heavily governed, and constantly observed. Miss one layer, and you introduce risk into production systems. Here’s how the layers stack up in data pipeline architecture and why each one matters:

We can view the ingestion layer as quality gate number one.

In the real world, your data sources can be spread all over the place: NoSQL clusters, REST APIs, thousands of IoT devices scattered across continents, SaaS exports, log streams, and even unstructured PDFs from vendors.

There are two main strategies to bring data into the pipeline:

As a data engineer, you can't let low-fidelity data slip through at this stage.

How a simple check can save your business from costly errors

Let’s run a scenario. Imagine your data pipeline ingests 5 million records overnight. Then, an upstream API quietly changes its payload, maybe adding a new field or altering a data type. If you skip validating the schema, some of those rows could end up malformed. So even though the job succeeds and data lands, your models now begin to retrain using corrupted inputs.

If caught during ingestion, a schema validator detects the issue and triggers an alert. It’s resolved in under ten minutes. If missed, it leads to several days of emergency debugging, rollback, retraining, and an unhappy VP asking why the fraud model just approved $2M in bad transactions.
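The ingestion check in this scenario can be sketched in a few lines. This is a minimal illustration, not a production validator: the `EXPECTED_SCHEMA` fields and the `validate_record` and `split_batch` helpers are hypothetical names chosen for the example, and a real pipeline would likely use a dedicated schema library instead.

```python
from typing import Any

# Hypothetical expected schema: field name -> required Python type.
EXPECTED_SCHEMA: dict[str, type] = {
    "transaction_id": str,
    "amount": float,
    "currency": str,
}


def validate_record(record: dict[str, Any], schema: dict[str, type]) -> list[str]:
    """Return a list of human-readable schema violations (empty if valid)."""
    problems = []
    for field, expected_type in schema.items():
        if field not in record:
            problems.append(f"missing field: {field}")
        elif not isinstance(record[field], expected_type):
            actual = type(record[field]).__name__
            problems.append(f"{field}: expected {expected_type.__name__}, got {actual}")
    # Unknown fields often signal an upstream payload change.
    for field in sorted(record.keys() - schema.keys()):
        problems.append(f"unexpected field: {field}")
    return problems


def split_batch(records, schema):
    """Separate valid rows from rejected rows so bad data never reaches training."""
    valid, rejected = [], []
    for record in records:
        problems = validate_record(record, schema)
        if problems:
            rejected.append((record, problems))
        else:
            valid.append(record)
    return valid, rejected
```

Routing rejected rows to a quarantine area and alerting when `rejected` is non-empty is one way to turn a silent payload change into a ten-minute fix.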

With AI, you can strengthen the ingestion layer by using tools that automatically pull out key entities, mask sensitive data, and detect early signs of drift. Recent studies have shown that incorporating AI at various stages of the pipeline improves data throughput by 40 percent and reduces latency by 30 percent.

Storage isn’t just a place to park data. It defines your scalability, cost curve, and enterprise data governance posture from day one.

As a data engineer or architect, you can choose from different storage options:

You can store raw data and process it on demand, or pre-process it and materialize it for instant access by end systems. Both strategies are valid, depending on latency requirements and workload priorities.

Build your data pipeline architecture using a modular approach. Use microservices, serverless functions, and event-driven triggers. Let each layer scale on its own without dragging the rest down.

Governance also kicks in at this stage. Version every file, catalog metadata, and track lineage end-to-end, so every pipeline action remains fully explainable. Or, as James warns, “Automate transformations without governance? You just built a black box. Good luck explaining it to legal.”
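To make "version every file and track lineage" concrete, here is a minimal sketch that derives a version from file contents and records which inputs and transform produced an output. The `content_version` and `lineage_record` helpers and the record fields are assumptions for illustration; a real catalog would persist these entries rather than return them.

```python
import hashlib
from datetime import datetime, timezone


def content_version(data: bytes) -> str:
    """Derive a version id from the content itself, so identical bytes share a version."""
    return hashlib.sha256(data).hexdigest()[:12]


def lineage_record(output: bytes, inputs: list[str], transform: str) -> dict:
    """Build a catalog entry linking an output version to its inputs and transform."""
    return {
        "version": content_version(output),
        "inputs": sorted(inputs),
        "transform": transform,
        "created_at": datetime.now(timezone.utc).isoformat(),
    }
```

Because the version comes from a content hash, rerunning a transformation on unchanged inputs yields the same version id, which makes unexpected changes easy to spot.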

The transformation layer is the processing core that takes raw and messy input data and turns it into model-ready features. It's where we clean, normalize, encode, scale, reduce noise, and enrich data for accurate models across training and inference.
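A few of these steps can be sketched with the standard library alone. The helper names below are hypothetical, and the choices of median imputation, min-max scaling, and one-hot encoding are just common examples rather than the only options.

```python
from statistics import median


def impute_median(values):
    """Replace None with the median of the observed values."""
    observed = [v for v in values if v is not None]
    fill = median(observed)
    return [fill if v is None else v for v in values]


def min_max_scale(values):
    """Scale values linearly into [0, 1]; a constant column maps to 0.0."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def one_hot(values):
    """Encode a categorical column as one 0/1 vector per row."""
    categories = sorted(set(values))
    return [[1 if v == c else 0 for c in categories] for v in values]
```

In practice, the fill value, scaling bounds, and category list should be computed on training data and reused unchanged at inference time, so that both paths see identically transformed features.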

Using AI-assisted data transformation techniques, we can further speed up the process. These tools can surface correlations, flag outliers, and suggest transformations, minimizing manual effort.

Feature stores can help prevent data leakage and save your team time and resources

To make feature engineering even more efficient, data teams are now using feature stores. A feature store is a central place where all the features your models rely on are stored and versioned. This way, you don’t have to deal with scattered files or worry about which version was used in production.

Feature stores also offer guardrails against data leakage, meaning you're not “accidentally training on tomorrow’s values.”

Consider a real-world example: A churn prediction model goes live with a reported accuracy of 90 percent in staging. But it fails in production, giving too many false positives. Upon investigating, the team discovers a critical flaw. The feature, days_since_last_login, was pulled in real time during training, not from a historical state. The model mistakenly learned that “users who haven’t logged in yet are about to churn.”

This failure could have been prevented with a feature store. By enforcing point-in-time consistency through historical snapshots, it ensures every training row uses feature values as they existed at that row's timestamp. As a result, the team avoids the rollback and retraining.
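The core of a point-in-time lookup is small enough to sketch. The `FeatureHistory` class below is a hypothetical, in-memory stand-in for what a feature store does at scale, and it assumes writes arrive in timestamp order.

```python
from bisect import bisect_right


class FeatureHistory:
    """Append-only history of (timestamp, value) pairs for one entity's feature."""

    def __init__(self):
        self._timestamps = []
        self._values = []

    def write(self, timestamp, value):
        # Assumes writes arrive in timestamp order (a simplification for this sketch).
        self._timestamps.append(timestamp)
        self._values.append(value)

    def as_of(self, timestamp):
        """Return the latest value recorded at or before `timestamp`, else None."""
        idx = bisect_right(self._timestamps, timestamp)
        return self._values[idx - 1] if idx else None
```

When building a training row labeled at time `t`, calling `as_of(t)` instead of reading the current value keeps a feature like days_since_last_login from leaking information the model would not have had at prediction time.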

Orchestration is the operational spine of the AI data pipeline. It handles scheduling, coordination, and monitoring, from data ingestion through to model deployment. Without it, the entire system breaks down.

Teams can use data orchestration tools like Airflow, Dagster, Prefect, or AWS Glue to:

But orchestration without observability? It's just scheduled chaos. You have to get eyes on pipeline metrics, such as:

With AI-assisted monitoring, pipelines can flag bias, critique model outputs, and catch hallucinations before customers do.

As James puts it: “We use AI to watch the pipeline and the models in real time. It’s AI monitoring AI. Sounds meta, but it works.”

Add automated alerts, intelligent retries, and self-healing logic. Suddenly, your AI data pipeline has a pulse and a brain.
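As a rough illustration of retries plus alerting, the sketch below wraps any task in exponential backoff and raises an alert only when every attempt has failed. The `run_with_retries` helper and its parameters are hypothetical; orchestrators such as Airflow, Dagster, and Prefect ship their own retry settings, which you would normally use instead.

```python
import time


def run_with_retries(task, max_attempts=3, base_delay=1.0, on_alert=print, sleep=time.sleep):
    """Run `task`, retrying with exponential backoff; alert and re-raise if all attempts fail."""
    for attempt in range(1, max_attempts + 1):
        try:
            return task()
        except Exception as exc:
            if attempt == max_attempts:
                # Final failure: notify a human or an incident system, then surface the error.
                on_alert(f"task failed after {attempt} attempts: {exc}")
                raise
            # Wait 1x, 2x, 4x... the base delay before the next attempt.
            sleep(base_delay * 2 ** (attempt - 1))
```

Passing `sleep` and `on_alert` as parameters keeps the helper easy to test and lets you swap `print` for a paging or chat integration.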

The serving layer makes the trained model available for real-time or batch predictions. Common tools include TensorFlow Serving, KServe, and SageMaker, which offer:

But shipping to production isn’t the win. Drift is waiting. Data evolves. People behave differently. Accuracy erodes. This is where data quality management becomes critical, and MLOps becomes your safety net.

To manage production performance:

Tools like Prometheus and Grafana become your control room, and techniques like SHAP and LIME provide explainability. Bias scans and enterprise data governance checks keep you compliant.

A tight monitoring regimen results in models that learn on the job, stay reliable, and evolve with the business, not fragile experiments that break the first time reality changes.
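One widely used way to quantify drift is the Population Stability Index (PSI), which compares the binned distribution of a feature in a baseline sample against a live sample. The sketch below is one possible implementation, not a method prescribed by any tool named here; the `psi` function, its equal-width binning, and the `eps` floor for empty bins are assumptions of the example.

```python
import math


def psi(expected, actual, bins=10, eps=1e-6):
    """Population Stability Index between a baseline sample and a live sample."""
    lo, hi = min(expected), max(expected)
    # Equal-width bins over the baseline range; fall back to 1.0 for a constant baseline.
    width = (hi - lo) / bins or 1.0

    def proportions(sample):
        counts = [0] * bins
        for x in sample:
            # Clamp out-of-range live values into the first or last bin.
            idx = min(max(int((x - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # Floor empty bins at eps so the logarithm stays defined.
        return [max(c / len(sample), eps) for c in counts]

    p, q = proportions(expected), proportions(actual)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))
```

A PSI of zero means the binned distributions match. Teams often treat values above roughly 0.1 as worth watching and above roughly 0.25 as a significant shift, though those cutoffs are rules of thumb and should be tuned per feature.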

Your model is only as sharp as its weakest record. With a single null value, you can end up with a bad prediction. One skewed sample? Suddenly, you’ve got a legal headache.

These are the five core pillars of data quality management that a data team must never ignore:

Those are the basics. Researchers list more than 25 data quality dimensions, and some frameworks go as high as 41 factors that can influence a pipeline. We can't list all of them here, but pick the ones that fit your use case and fix your data.
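Several commonly cited dimensions can be measured with very little code. The sketch below covers completeness, uniqueness, and timeliness as examples only; the function names and the row format (a list of dictionaries) are assumptions, and the dimensions you track should follow your own use case.

```python
from datetime import datetime, timedelta, timezone


def completeness(rows, field):
    """Share of rows where `field` is present and not None."""
    return sum(r.get(field) is not None for r in rows) / len(rows)


def uniqueness(rows, key):
    """Number of distinct `key` values divided by the number of rows."""
    return len({r[key] for r in rows}) / len(rows)


def is_fresh(latest_event, max_age, now=None):
    """True if the newest record is no older than `max_age`."""
    now = now or datetime.now(timezone.utc)
    return now - latest_event <= max_age
```

Each function returns a number or a boolean that can be compared against a threshold, which makes these checks straightforward to wire into pipeline alerts.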

AI-assisted techniques can also handle the manual grunt work for you. They can spot data anomalies and trigger fixes before production feels the pain. But remember, automation has blind spots. “Trust is everything,” James reminds us. “Humans stay in to fix what AI can’t. Because garbage in means garbage out.”

His point underscores why it’s essential that humans stay in the loop to perform manual review for high-stakes data, catch the nuance AI misses, validate corrections, and hunt hidden data bias.

“Data governance is COOL now.”—Juan Sequeda, data.world

Every data set, every transformation, every model output must be fully traceable—no exceptions. Tell stakeholders or auditors you can’t explain how AI works, and you’ve just ended the meeting—and possibly your role.

These are the two pillars that make enterprise data governance possible in your data pipeline:

When stewardship and contracts align, teams and stakeholders can rely on the data. This eliminates any confusion or uncertainty regarding responsibility.

James reinforces this point, noting that “Governance forces explainability. Understand why and how decisions are made rather than simply saying AI did it.” In other words, governance is more than just bureaucracy. It’s the tool that keeps AI transparent, auditable, and defensible.

Also, regulations like GDPR, CCPA, HIPAA, and the EU AI Act aren’t optional. Data teams should bake them into their AI data pipeline from day one. Use governance-as-code and embed rules directly in your DAGs and configs.
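Governance-as-code can be as simple as a policy object that a pipeline step enforces on every row. The sketch below is illustrative only: the `POLICY` contents, column names, roles, and the `mask` and `enforce_policy` helpers are hypothetical, and it does not by itself satisfy any specific regulation.

```python
import hashlib

# Hypothetical policy: columns tagged as PII must be masked before serving.
POLICY = {"pii_columns": {"email", "phone"}, "allowed_roles": {"analyst", "ml_engineer"}}


def mask(value: str) -> str:
    """Replace a value with a stable token derived from its SHA-256 hash."""
    return hashlib.sha256(value.encode("utf-8")).hexdigest()[:16]


def enforce_policy(row: dict, role: str, policy: dict = POLICY) -> dict:
    """Reject unauthorized roles and mask PII columns in a single pipeline step."""
    if role not in policy["allowed_roles"]:
        raise PermissionError(f"role {role!r} may not read this dataset")
    return {
        col: mask(str(val)) if col in policy["pii_columns"] and val is not None else val
        for col, val in row.items()
    }
```

Keeping the policy in version control next to the pipeline code means every change to it is reviewed and auditable. Note that a plain hash is used here for brevity; a real deployment would more likely use a keyed hash or a tokenization service, since unsalted hashes of values like email addresses can be guessed.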

But there are plenty of roadblocks in this journey, like:

The solution is to start small. Introduce lineage tracking and role-based access first. Prove value through fewer incidents and faster audits. Once established, you can scale to enterprise-wide policy engines that automate enforcement.

If you overlook this layer, even a single compliance failure can lead to regulatory scrutiny and difficult explanations. But, if you build it right, governance isn’t overhead. It’s insurance.

James’s rule is “Keep it nice and small; build frameworks that can be run safely but consolidated for efficiency.” In other words: Small. Safe. Smart. Build it modular to run anywhere, scale everywhere.

A modular data pipeline architecture helps future-proof your AI infrastructure. Every layer (ingestion, transformation, serving) is implemented as a standalone module. In hybrid cloud environments, you can run these modules in parallel or roll them out incrementally.

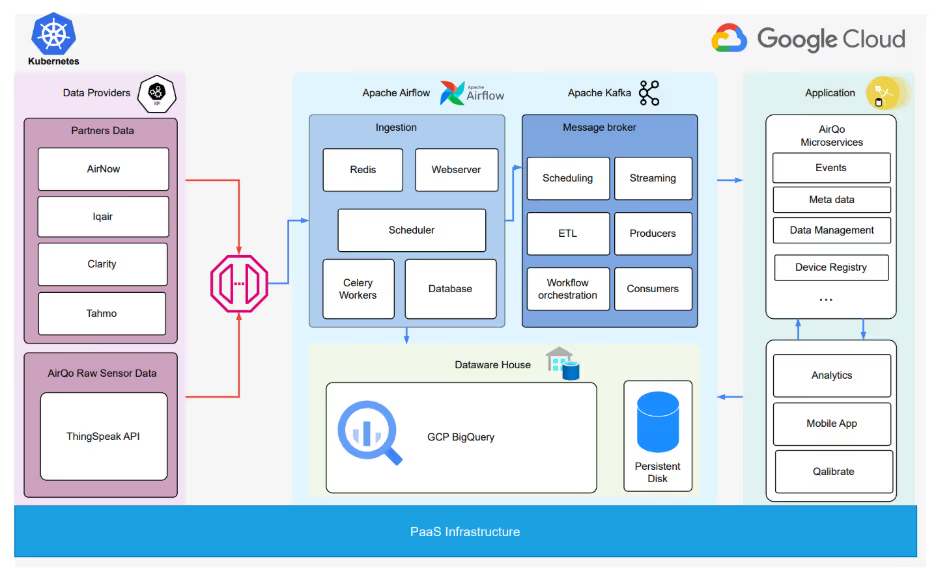

For example, the AirQo project in Africa processes millions of air-quality sensor readings every month. They run a modular, cloud-native data pipeline powered by Airflow for orchestration and Kafka for streaming. The pipeline stays scalable, compliant, and resilient under real-world load.

As the pipeline scales, you can track each module or layer through observability dashboards. Teams can track throughput, end-to-end latency, and cost per job in real time. If performance metrics begin to deviate, they can adjust Spark executors or throttle Kafka partitions before service level agreements or budgets are affected.

We’re already seeing multi-cloud pipelines with deep learning-driven autoscaling. Resources can shift dynamically across AWS, GCP, and Azure with low-latency inference at a global scale.

Every dependable and effective data ecosystem begins in chaos with notebooks, cron jobs, and ad hoc scripts. They might deliver quick wins. That is, until scale hits and the pipeline starts to fail. There’s no lineage, no ownership. One bad deployment and the entire system can begin to collapse.

You don’t flip a switch and suddenly have enterprise-grade status. You build incrementally. As James advises, “Start small. Use a small team to gradually and calmly piece everything together.”

Juan Sequeda’s ABCDE framework gives a suitable roadmap for building governed data products:

This isn’t a solo mission. It requires cross-functional ownership from engineers, analysts, and scientists, all actively engaged. Continuous feedback, shared alerts, and joint retrospectives are essential.

If you do it right, you can go from fragile hacks to auditable, resilient systems that scale without failure.

Modern AI pipelines won’t require manual intervention. They’ll be autonomous, predictive, and self-optimizing.

Imagine:

AI-driven orchestration already does this. It spots bottlenecks, adjusts resources, and heals drift. This is where reactive becomes predictive and proactive. But don’t mistake it for AGI just yet. We’re at smart automation.

James captures the current reality: “AGI? Not today. Efficiency. Enrichment. Real value. That’s where today’s wins are.” His point is a good reminder that today’s breakthroughs are practical, measurable gains and not leaps of science fiction.

With AI’s assistance, future pipelines will deliver lower latency, rock-solid reliability, and compliance that doesn’t slow you down. They’ll adapt quickly, surface insights faster, and leave zero trust gaps.

Building an enterprise-grade data pipeline requires trust at scale through accurate and traceable data, shared team ownership, and reliable AI outcomes that drive real business results.

Domo provides the unified platform that teams can trust to build strong, resilient data pipelines. It offers:

Domo's platform handles the heavy lifting for real-time AI workloads with easy ingestion, automated dataflows, and built-in governance. It lets you zero in on what matters, delivering business value through pipelines that are rock-solid, traceable, and ready for enterprise scale.

Every scalable AI initiative begins with clean, contextualized, and governed data. Learn more in The Best AI Starts with Clean Data and the AI Readiness Guide.